LIO-SAM源码解析(五):mapOptmization.cpp

1. 代码流程

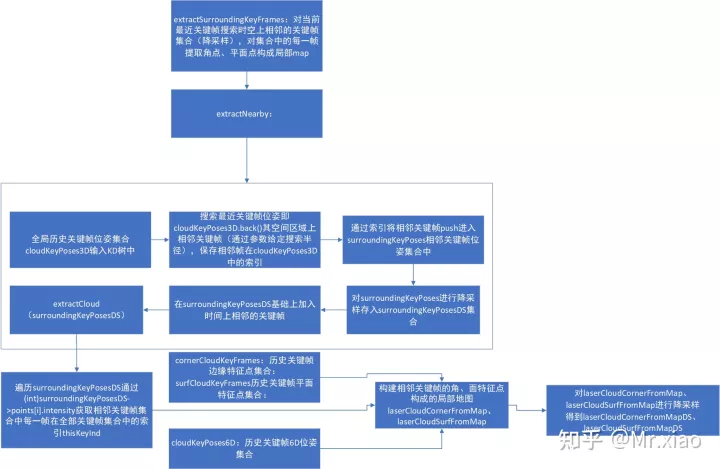

1.1. extractSurroundingKeyFrames()

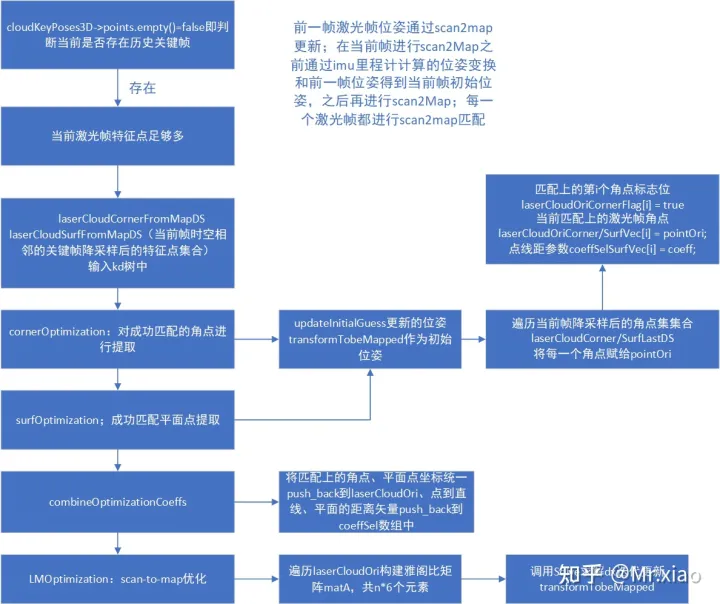

1.2. scan2MapOptimization()

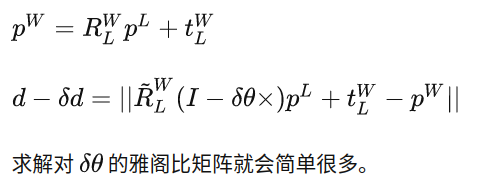

这个函数主要就是进行帧到地图的匹配,通过点到面、点到线的距离距离最小作为优化目标。LOAM中雅阁比矩阵推导其实还是过于复杂了,可以使用进行误差扰动来计算雅阁比矩阵,姿态使用失准角(李代数)模型推导更为简单

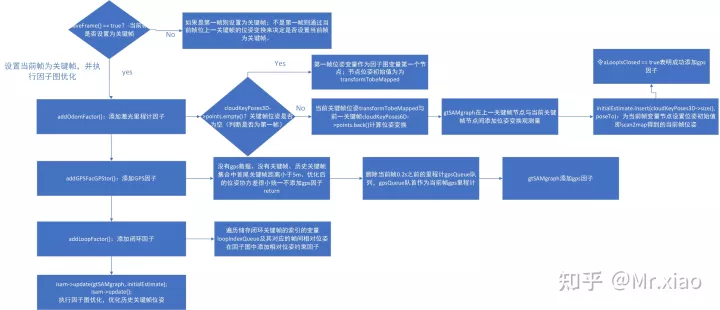

1.3. saveKeyFramesAndFactor()

在这个函数一直维持着一个关键帧节点构建的图,将GPS位置、关键帧间相对位姿、闭环关键帧间相对位姿(两帧的点云匹配得到)作为观测值,对关键帧位姿进行优化,在没有GPS情况下,精度主要靠回环。

利用当前关键帧scan2map匹配位姿和上个关键帧优化后的位姿(这一次优化的初值)来计算关键帧间相对位姿观测量,实际上这个观测量对位姿起不到优化的作用。

2. 功能说明

2.1. 第一个巨大的回调函数:lasercloudinfoHandle

这个函数监听的是/feature/cloud_info,关于这个话题里面包含的内容,我已经在featureExtraction.cpp的总结部分说过了。这里回忆一下,cloud_info是作者自定义的一个特殊的msg类型,包含了当前激光帧的角点集合,平面点集合,一维数组,每根线开始的点和结束点在一维数组中的索引……

那么收到数据,先保存时间戳,然后提取已经在featureExtraction.cpp中被提取的角点和平面点信息,保存到*laserCloudCornerLast和*laserCloudSurfLast中。请记住这两个命名。

频率控制:当前时刻-上一时刻>=0.15s时,才开始下列循环:

2.1.1. UpdateInitGuess:当前帧初始化

当关键帧集合为空时,用激光帧的imu原始数据角度数据初始化,并且把数据用lastImuTransformation保存(记住这个命名),返回。

此时推荐回顾一下imageProjection.cpp部分的总结3.2

假如cloudInfo.odomAvailable=true时,那么就用一个transBack来记录cloudInfo.initialGuessX等信息,(这个信息其实来自于imupreintegration.cpp中发布的imu里程计数据),然后记录增量,在之前系统状态transformTobeMapped的基础上,加上这个增量,再次存入transformTobeMapped。 注意这个transformTobeMapped,这个数据结构,在这个cpp里,我们可以理解为就是它在存储着激光里程计部分的系统状态,包括位置与姿态。

注意,这里有一个lastImuPreTransformation,这个用来保存上一时刻imu里程计的数据,根据它和当前的imu里程计来算增量。不要和lastImuTransformation变量混起来,虽然这俩变量名字长的很像。

然后覆盖lastImuTransformation(在这个case里没用到),返回;

假如cloudInfo.imuAvailable=true,那么进入这个case:

注意,lastImuTransformation在1.1.1和1.1.2中并未用到,只是不断的在替换成最新数据。当cloudInfo.odomAvailable一直是true的时候,程序压根也不会进入到这个case。

但是,凡事总有例外,万一哪里没有衔接好,imu里程计没有及时算出来,那么就导致此时激光帧的位姿没有一个初始化的数据(我们之后是要在初始化的基础上进行优化的),那么之后的优化就无从进行。因此,就要用到这个case。

这里主要思路是用imuRollInit数据来初始化,如果你回顾过imageProjection.cpp部分的总结3.2 那么你应该就会懂,这里的数据来源是原始imu数据的角度信息。那么如果这里有数据,就用lastImuTransformation当成最新的上一时刻数据,当前数据transBack和它算一个增量,然后累积到系统值transformTobeMapped上面去。最后更新覆盖lastImuTransformation,返回。

2.1.2. extractSurroundingKeyFrames

这个是比较复杂的一个函数,以下的内容希望读者可以心平气和的,每个字都依次念一遍。

如果没有关键帧,那就算了,返回;

如果有,就调用extractNearby函数。

关键帧是啥?cloudKeyPoses3D,我们要记住这个变量,虽然到现在为止,我们还不知道它是怎么来的,但是这个东西是怎么获取的,我们在后续必须弄明白。

在这里我先剧透一下:它里面装的是历史的“关键帧”的位置,即x,y,z三个值。需要明确:这里装的绝不是历史的关键帧位置处的点云。而是历史关键帧记录时刻机器人所在的位置。

同理还有一个cloudKeyPoses6D,它比这个3D还多了三个角度信息。之所以要用一个6D一个3D分别来装关键帧,我现在直接揭晓答案:用3D装,是因为我们要根据这个来构建KD树,搜索历史上最近的节点。“最近”指的是距离上最近,即xyz空间坐标最近,和角度无关。而cloudKeyPoses6D,是用来投影点云的,把当前帧点云投影到世界坐标系下,那么投影就必须要用角度信息了,所以作者分别用了一个3D和一个6D来装数据。

Kd树的原理我这里不写,随便放一个链接:机器学习——详解KD-Tree原理 - 知乎 ,实际上代码里也只是调库,所以这里我不写。

2.1.3. extractNearby函数

使用kd树,搜索当前最近的关键帧,在给定半径内(默认是50m)所有关键帧最近的位置,并把结果返回到pointSearchInd,把距离返回到pointSearchSqDis中。

根据索引pointSearchInd,把相邻关键帧存入到surroundingKeyPoses中。

下采样,装进surroundingKeyPosesDS中,并在原始的surroundingKeyPoses其中找到最近的一个点,替换掉索引。(关于这个,我的理解是,下采样后不太准确了,好几个不同的关键帧可能因为下采样的原因混成了一个,所以要用原始数据对索引进行一个修正,这样以后才方便根据索引投影点云)

顺手把10s内的关键帧cloudKeyPoses3D中的位置也加入到surroundingKeyPosesDS中。

extractCloud:提取边缘和平面点对应的localmap,把surroundingKeyPosesDS传入到函数中:

对输入的surroundingKeyPosesDS进行遍历,找到50m之内的位置,然后用transformPointCloud把对应位置的点云,进行变换到世界坐标系下。

如何变换呢?根据上面提到的cloudKeyPoses6D的位姿,然后把cornerCloudKeyFrame和surfCloudKeyFrame中根据索引找到点云,投影到世界坐标系下。

那么在这里,cornerCloudKeyFrame和surfCloudKeyFrame是什么?之前从来没有出现过。我这里同样进行剧透,它里面存放的是下采样的点云。注意总结1中的*laserCloudCornerLast和*laserCloudSurfLast这两个东西,这是瞬时点云,这个东西会在之后被下采样,然后装入cornerCloudKeyFrame中。

在角点点云和平面点点云被投影到世界坐标系下后,会被加入到laserCloudCornerFromMap和laserCloudSurfFromMap等数据结构中,然后再合出一个pair类型的Container<关键帧号,<角点集合,平面点集合>>。

2.1.4. downsampleCurrentScan

这部分比较简单,就是对最外层的回调函数中的laserCloudCornerLast之类的东西,进行一个下采样,保存到laserCloudCornerLastDS这些以DS结尾的数据结构中,并且把数目存到laserCloudCornerLastDSNum这种以DSNUM结尾的数据结构中。其实就是代表了当前帧点云的角点/平面点的下采样集合,和数目值。

2.1.5. Scan2MapOptimization

这个函数是本cpp中第二复杂的函数。我们现在把它展开。

首先,没有关键帧保存,那就返回,不处理;

如果DSNUM这种记录角点和平面点的数据结构中,发现数目不够多,也不处理;只有在数目足够多的时候才进行处理,默认最少要10个角点,100个平面点。

迭代30次:

边缘点匹配优化:CornerOptimization

平面点匹配优化:SurfOptimization

组合优化多项式系数:combineOptimizationCoeffs

LMOptimization判断迭代误差是否足够小,如果是true则认为迭代完成,返回;

transformUpdate:原始的imu的rpy,在这里和优化后的激光里程计位姿进行一个加权融合。 接下来,我们依次展开这些函数:

边缘点匹配优化:CornerOptimization

把系统状态transformTobeMapped做一个数据格式转换,变成transPointAssociateToMap形式

从当前角点下采样集合laserCloudCornerLastDS进行遍历,找到世界坐标系下最近的5个点,要求小于1m。

求5个样本的均值,协方差矩阵。对协方差矩阵进行特征值分解,如果最大特征值大于次大特征值的3倍,那么就认为构成线。

一旦发现构成线,那么就在均值沿着最大特征向量方向(把它看成线的方向)前后各取一个点(+-0.1 x 方向)。

X0为当前点,X1和X2为“X0附近的5个点一起算出的均值沿方向前后各取的一点”,叉乘计算三点面积a012,x1x2底边长度l12。然后再做一次叉乘,得到X0距离x1,x2连线的垂线段的单位方向向量(la,lb,lc)。并计算点到直线的距离ld2=a012/l12。

用一个鲁棒和函数,使得距离ld2越大,s越小。然后用coeff来保存“鲁棒后”的距离,和“鲁棒后”的点到线的垂线的方向向量。

如果点到直线的距离小于1m,那么存入数据结构,laserCloudOriCornerVec为原始点云,coeffSelCornerVec为鲁棒距离和鲁棒向量,laserCloudOriCornerFlag代表当前点X0 已经找到对应的线。

思考:为什么要加入方向向量呢?是因为这个在优化的偏导数中会被用到。

平面点匹配优化:SurfOptimization

和上面的同理,对系统状态量transformTobeMapped进行数据格式转换;

从当前角点下采样集合laserCloudSurfLastDS进行遍历,找到世界坐标系下最近的5个点,要求小于1m。

直接用matA0存储这个5个点,求解Ax+By+Cz+1=0的ABC系数(用QR分解)

然后对ABCD,代码中为pa,pb,pc,pd=1进行单位化。

根据点x0到平面的距离d=d=\frac{|Ax_0+By_0+Cz_0+D|}{\sqrt{A^2+B^2+C^2}} (分母为1)判断是否构成平面。如果有一个大于0.2m则不构成。

pd2为点到平面的距离,也用鲁棒和函数处理,并且比上两次开方(这点我不理解,我猜就是用来鲁棒的,换成1次开方可能也差不多,意义或许不大),然后和角点部分类似,得到s,存入数据结构。

组合优化多项式系数:combineOptimizationCoeffs

这个比较简单,就是把CornerOptimization和SurfOptimization中已经确定匹配关系的点提取出来,laserCloudOri统一把角点和平面点装在一起,coeffSel统一装之前计算得到的“鲁棒优化向量”(角点就是点到直线的“鲁棒垂线”,平面点就是点到平面的“鲁棒法线”)。

优化向量会在LMOptimization中进行优化。

LMOptimization判断迭代误差是否足够小,如果是true则认为迭代完成,返回。

这一部分大内容,主要麻烦在原理上面。

这里推荐一个阅读:LIO-SAM-scan2MapOptimization()优化原理及代码解析

这个文章中公式写的非常好。我就不照搬了。

另外在推导部分,可以仔细研究一下这篇文章:

LeGO-LOAM中的数学公式推导

虽然是Lego-loam的推导,但是Lego-loam和lio-sam在这部分的原理是一样的,因此可以通用。看完这篇文章,就能理解1.4.2.3中我提到的“优化向量”是干啥用的。

总之,照着原理,构建JtJ*delt_x=-JTf,然后构建MatAtA,matAtB,利用cv:solve提供的QR分解,得到matX,即delta_x。

当特征点缺乏时,状态估计方法会发生退化。特征值小于阈值,则视为退化方向向量。这块的理论,可以参考LOAM SLAM原理之防止非线性优化解退化

更新位姿,判断收敛与否。那么真正的雷达里程计系统状态transformTobeMapped,就是在这里被更新。

1.4.3 transformUpdate:原始的imu的rpy,在这里和优化后的激光里程计位姿进行一个加权融合。

当imuAvailable=True的时候,并且俯仰角<1.4,那么对位姿和原始imu的rpy做一个加权平均,(权重在配置文件中可以被设置为0.01)。主要是对roll,pitch仅加权平均,并且对z进行一个高度约束(也就是clip,不得超过配置文件中的z_tollerance,这个主要是一个小trick,应对不能飞起来的无人小车用的),更新transformTobeMapped。

好了,那么 现在回到回调函数的主流程:

1.5 saveKeyFramesAndFactor:之前函数二话不说就用了一些并没有出现过的数据结构,例如什么cloudKeyPoses3D,cornerCloudKeyFrame之类的东西,看完这个函数将明白这些变量是怎么来的。

1.5.1 saveFrame:计算当前帧和前一帧位姿变换,如果太小不设关键帧。默认是角度<0.2,距离<1m,两者不满足其一就不保存;

1.5.2 addOdomFactor:

这个是要加入激光里程计因子,给图优化的模型gtSAMgraph。在1.5之前别的函数里,如果没有关键帧,直接就跳过了。但是这里不能跳过。

如果暂时还没有关键帧,就把当前激光系统状态transformTobeMapped,打包成一个PriorFactor加入到gtSAMgraph里。如果目前已经有关键帧了,就把最后一个关键帧,和当前状态transformTobeMapped计算一个增量,把这个增量打包成一个BetweenFactor,加入到gtSAMgraph里头去。

initialEstimate代表变量初值,用transformTobeMapped赋值。

1.5.3 addGpsFactor:

GPS的筛选规则为:如果没有GPS信息,没有关键帧信息,首尾关键帧小于5m,或者位姿的协方差很小(x,y的协方差阈值小于25),就不添加GPS。

否则,遍历GPS列表,当前帧0.2s以前的不要,或者GPS的协方差太大了也不要,无效数据也不要…… 找到正常数据,打包成一个gps_factor,加入gtSAMgraph里面。

1.5.4 addLoopFactor:

这个其实和当前的回调函数无关,因为当前回调函数监听的是/feature/cloud_info信息,回环是由其他线程监控和检测的。那么在这里,它查询回环队列,加入回环因子,就是一个顺手的事情,反正现在要更新优化,那么查一下,如果有候选的等在那里,就顺手加入优化。如果用做饭来比喻这件事,那么另外的回环检测的线程就是相当于另一个人在备菜,这里addLoopFactor相当于是在炒菜,备好了就先炒,没有备好就算了。

1.5.5 gtsam正常更新。如果有回环那就多更新几次。

1.5.6 把cloudKeyPoses3D,cloudKeyPoses6D,分别装上信息,cloudKeyPoses3D代表关键帧的位置,cloudKeyPoses6D代表关键帧的位置+姿态,为什么要有一个3D一个6D呢?6D里不已经包含了3D信息吗?这个问题我在1.2处已经解释过了。

1.5.7 用优化结果更新transformTobeMapped。

1.5.8 cornerCloudKeyFrames,surfCloudKeyFrames装入信息,回顾一下,回调函数开头收到的点云数据为laserCloudCornerLast,laserCloudSurfLast,然后在downsampleCurrentScan处这俩信息被下采样,加上了DS后缀。在这里把它装到cornerCloudKeyFrames和surfCloudKeyFrames中。

(回顾:cornerCloudKeyFrames代表关键帧位置处的角点点云,surfCloudKeyFrames代表关键帧位置处的平面点点云。这俩东西就是上面1.2处extractSurroundingKeyFrames用到的内容,cornerCloudKeyFrames通过cloudKeyPoses6D变换到世界系下,被存到laserCloudCornerFromMap里面,这个FromMap又在scan2MapOptimization函数中被设置到kdtreeCornerFromMap这个Kd树里,在cornerOptimization函数里,就是把当前帧的激光点云依据1.1的初值transformTobeMapped,变换到世界坐标系下,再用kdtreeCornerFromMap进行kd搜索,建立匹配关系,优化transformTobeMapped。)

1.5.9 updatePath,更新里程计轨迹。把cloudKeyPoses6D传入,保存在globalPath中。不过暂时还没有进行发布。

1.6 correctPoses:

如果发现回环的话,就把历史关键帧通通更新一遍。我们刚刚在1.5.5里面虽然更新过了,但是结果都是保存在gtsam里面的,cloudKeyPoses3D和cloudKeyPoses6D,这俩保存位置和位姿的变量仍然保留着更新前的关键帧位姿。所以就根据更新结果,把他俩更新一遍。

为什么不更新cornerCloudKeyFrames和surfCloudKeyFrames呢?因为没有必要更新,这俩存的是机器人坐标系下的点云,和机器人在世界系下的位姿是无关的。

1.7 publishOdometry:

到此为止,激光里程计部分的transformTobeMapped就不再更新了。回顾一下transformTobeMapped经历了哪些变换:在1.1部分用imu角度初值或是imu里程计初值赋值,然后在scan2mapOptimization里面根据点到线、点到面的方程进行更新,再在transformUpdate里和原始imu的rpy信息进行一个很小的加权融合(不过这一步我觉得没啥大用),最后在saveKeyFrameAndFactor里面再加入GPS因子和回环因子进行一轮优化。

最后把transformTobeMapped发布出去,其他cpp文件里,接收的“激光里程计”就是这么个东西。也就是lio_sam/mapping/odometry_incremental.

1.8 publishFrames:

这个纯粹就是把乱七八糟东西都发布出去,不管有没有用。如果用户需要就可以监听它。

1.8.1发布关键帧位姿集合,把cloudKeyPoses3D发布成lio_sam/mapping/trajectory

1.8.2发布局部降采样平面点,把laserCloudSurfFromMapDS(历史默认50m内的点,在extractCloud中被设置),发布为lio_sam/mapping/map_local

1.8.3发布当前帧的下采样角点和平面点,用优化后的激光里程计位姿transformTobeMapped投影到世界系下发布,/lio_sam/mapping/cloud_registered

1.8.4发布原始点云经过配准的点云:输入的/feature/cloud_info的cloud_deskewed字段是由featureExtraction.cpp发布的,其cloud_deskewed是源于imageProjection.cpp发布的原始去畸变点云。把它发布到世界坐标系下,然后以/lio_sam/mapping/cloud_registered_raw的形式发布。

1.8.5发布轨迹,把1.5.9里装好在globalPath里面但是还没有发布的轨迹发布出去,名为/lio_sam/mapping/path。

那么到现在,基本上mapOptimization.cpp的内容就结束了,但是还有一些尾巴:

2.gpshandle:监听GPS数据,保存到GPS队列里。

3.loopinfohandle:监听"lio_loop/loop_closure_detection",订阅来自外部闭环检测程序提供的闭环数据,本程序没有提供,这里实际没用上。

4.loopClosureThread:这个线程在主函数里单独开了一个线程,简要说一下:

4.1 读取配置文件中是否开启回环检测。

4.2开始无限循环:

4.2.1 performLoopClosure:

在历史帧中搜索距离关键帧最近,时间间各较远的关键帧(默认是30s以外,15m以内)没找到就返回,如果找到了,结果就放在loopKeyPre当中,loopKeyCur保存最近一个关键帧。

把最近一个关键帧的特征点提出来,放入cureKeyframeCloud里;回环候选帧前后各25帧也提取出来,放入prevKeyframeCloud里。

把prevKeyframeCloud发布出去,名为lio_sam/mapping/icp_loop_closure_history/cloud

调用pcl库的icp轮子,设定阈值,参数,用setInputSource,setInputTarget传入两个点云,用align对齐。成功阈值设定为0.3,成功则存在icp.getFinalTransformation里面。把当前关键帧的点云,用这个结果icp.getFinalTransformation,转换以后,以lio_sam/mapping/icp_loop_closure_corrected_cloud发布出去。

把当前帧的的位姿用icp.getFinalTransformation结果校正一下,把pair<当前,回环>,间隔位姿,噪声用队列存起来,等待addLoopFactor来调用,即上面的1.5.4部分。

4.2.2 visualizeLoopClosure:

这部分内容没啥好说的,就是用于rviz展示,把关键帧节点和二者的约束用点和线连起来,以lio_sam/mapping/loop_closure_constraints发布出去。

5. 最后一个线程,visualizeGlobalMapThread:

这个主要是两块内容:

5.1 publishGlobalmap:把当前关键帧附近1000m(默认)的关键帧找出来(其实也就是全局的了),降采样,变换到世界系下,然后发布为lio_sam/mapping/map_global.

5.2 saveMapService:这个用来保存pcd格式的点云地图。在配置文件中可以设置开启与否,和存储位置。注意,当程序结束时,ctrl+c以后,才会启动保存任务。这个部分的代码,和发布globalmap部分的核心内容基本一致,反正就是把cornerCloudKeyFrames,surfCloudKeyFrames用cloudKeyPoses6D变换到世界系下,分别保存角点pcd和平面点pcd,以及全局(合起来)的pcd文件。

3. 代码

#include "utility.h"

#include "lio_sam/cloud_info.h"

#include "lio_sam/save_map.h"#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include #include using namespace gtsam;using symbol_shorthand::X; // Pose3 (x,y,z,r,p,y)

using symbol_shorthand::V; // Vel (xdot,ydot,zdot)

using symbol_shorthand::B; // Bias (ax,ay,az,gx,gy,gz)

using symbol_shorthand::G; // GPS pose/*** 6D位姿点云结构定义

*/

struct PointXYZIRPYT

{PCL_ADD_POINT4D PCL_ADD_INTENSITY; float roll; float pitch;float yaw;double time;EIGEN_MAKE_ALIGNED_OPERATOR_NEW

} EIGEN_ALIGN16; POINT_CLOUD_REGISTER_POINT_STRUCT (PointXYZIRPYT,(float, x, x) (float, y, y)(float, z, z) (float, intensity, intensity)(float, roll, roll) (float, pitch, pitch) (float, yaw, yaw)(double, time, time))typedef PointXYZIRPYT PointTypePose;class mapOptimization : public ParamServer

{public:// gtsamNonlinearFactorGraph gtSAMgraph;Values initialEstimate;Values optimizedEstimate;ISAM2 *isam;Values isamCurrentEstimate;Eigen::MatrixXd poseCovariance;ros::Publisher pubLaserCloudSurround;ros::Publisher pubLaserOdometryGlobal;ros::Publisher pubLaserOdometryIncremental;ros::Publisher pubKeyPoses;ros::Publisher pubPath;ros::Publisher pubHistoryKeyFrames;ros::Publisher pubIcpKeyFrames;ros::Publisher pubRecentKeyFrames;ros::Publisher pubRecentKeyFrame;ros::Publisher pubCloudRegisteredRaw;ros::Publisher pubLoopConstraintEdge;ros::Subscriber subCloud;ros::Subscriber subGPS;ros::Subscriber subLoop;ros::ServiceServer srvSaveMap;std::deque gpsQueue;lio_sam::cloud_info cloudInfo;// 历史所有关键帧的角点集合(降采样)vector::Ptr> cornerCloudKeyFrames;// 历史所有关键帧的平面点集合(降采样)vector::Ptr> surfCloudKeyFrames;// 历史关键帧位姿(位置)pcl::PointCloud::Ptr cloudKeyPoses3D;// 历史关键帧位姿pcl::PointCloud::Ptr cloudKeyPoses6D;pcl::PointCloud::Ptr copy_cloudKeyPoses3D;pcl::PointCloud::Ptr copy_cloudKeyPoses6D;// 当前激光帧角点集合pcl::PointCloud::Ptr laserCloudCornerLast; // 当前激光帧平面点集合pcl::PointCloud::Ptr laserCloudSurfLast; // 当前激光帧角点集合,降采样,DS: DownSizepcl::PointCloud::Ptr laserCloudCornerLastDS; // 当前激光帧平面点集合,降采样pcl::PointCloud::Ptr laserCloudSurfLastDS; // 当前帧与局部map匹配上了的角点、平面点,加入同一集合;后面是对应点的参数pcl::PointCloud::Ptr laserCloudOri;pcl::PointCloud::Ptr coeffSel;// 当前帧与局部map匹配上了的角点、参数、标记std::vector laserCloudOriCornerVec; std::vector coeffSelCornerVec;std::vector laserCloudOriCornerFlag;// 当前帧与局部map匹配上了的平面点、参数、标记std::vector laserCloudOriSurfVec; std::vector coeffSelSurfVec;std::vector laserCloudOriSurfFlag;map, pcl::PointCloud>> laserCloudMapContainer;// 局部map的角点集合pcl::PointCloud::Ptr laserCloudCornerFromMap;// 局部map的平面点集合pcl::PointCloud::Ptr laserCloudSurfFromMap;// 局部map的角点集合,降采样pcl::PointCloud::Ptr laserCloudCornerFromMapDS;// 局部map的平面点集合,降采样pcl::PointCloud::Ptr laserCloudSurfFromMapDS;// 局部关键帧构建的map点云,对应kdtree,用于scan-to-map找相邻点pcl::KdTreeFLANN::Ptr kdtreeCornerFromMap;pcl::KdTreeFLANN::Ptr kdtreeSurfFromMap;pcl::KdTreeFLANN::Ptr kdtreeSurroundingKeyPoses;pcl::KdTreeFLANN::Ptr kdtreeHistoryKeyPoses;// 降采样pcl::VoxelGrid downSizeFilterCorner;pcl::VoxelGrid downSizeFilterSurf;pcl::VoxelGrid downSizeFilterICP;pcl::VoxelGrid downSizeFilterSurroundingKeyPoses; // for surrounding key poses of scan-to-map optimizationros::Time timeLaserInfoStamp;double timeLaserInfoCur;float transformTobeMapped[6];std::mutex mtx;std::mutex mtxLoopInfo;bool isDegenerate = false;cv::Mat matP;// 局部map角点数量int laserCloudCornerFromMapDSNum = 0;// 局部map平面点数量int laserCloudSurfFromMapDSNum = 0;// 当前激光帧角点数量int laserCloudCornerLastDSNum = 0; // 当前激光帧面点数量int laserCloudSurfLastDSNum = 0;bool aLoopIsClosed = false;map loopIndexContainer; // from new to oldvector> loopIndexQueue;vector loopPoseQueue;vector loopNoiseQueue;deque loopInfoVec;nav_msgs::Path globalPath;// 当前帧位姿Eigen::Affine3f transPointAssociateToMap;// 前一帧位姿Eigen::Affine3f incrementalOdometryAffineFront;// 当前帧位姿Eigen::Affine3f incrementalOdometryAffineBack;/*** 构造函数*/mapOptimization(){// ISM2参数ISAM2Params parameters;parameters.relinearizeThreshold = 0.1;parameters.relinearizeSkip = 1;isam = new ISAM2(parameters);// 发布历史关键帧里程计pubKeyPoses = nh.advertise("lio_sam/mapping/trajectory", 1);// 发布局部关键帧map的特征点云pubLaserCloudSurround = nh.advertise("lio_sam/mapping/map_global", 1);// 发布激光里程计,rviz中表现为坐标轴pubLaserOdometryGlobal = nh.advertise ("lio_sam/mapping/odometry", 1);// 发布激光里程计,它与上面的激光里程计基本一样,只是roll、pitch用imu数据加权平均了一下,z做了限制pubLaserOdometryIncremental = nh.advertise ("lio_sam/mapping/odometry_incremental", 1);// 发布激光里程计路径,rviz中表现为载体的运行轨迹pubPath = nh.advertise("lio_sam/mapping/path", 1);// 订阅当前激光帧点云信息,来自featureExtractionsubCloud = nh.subscribe("lio_sam/feature/cloud_info", 1, &mapOptimization::laserCloudInfoHandler, this, ros::TransportHints().tcpNoDelay());// 订阅GPS里程计subGPS = nh.subscribe (gpsTopic, 200, &mapOptimization::gpsHandler, this, ros::TransportHints().tcpNoDelay());// 订阅来自外部闭环检测程序提供的闭环数据,本程序没有提供,这里实际没用上subLoop = nh.subscribe("lio_loop/loop_closure_detection", 1, &mapOptimization::loopInfoHandler, this, ros::TransportHints().tcpNoDelay());// 发布地图保存服务srvSaveMap = nh.advertiseService("lio_sam/save_map", &mapOptimization::saveMapService, this);// 发布闭环匹配关键帧局部mappubHistoryKeyFrames = nh.advertise("lio_sam/mapping/icp_loop_closure_history_cloud", 1);// 发布当前关键帧经过闭环优化后的位姿变换之后的特征点云pubIcpKeyFrames = nh.advertise("lio_sam/mapping/icp_loop_closure_corrected_cloud", 1);// 发布闭环边,rviz中表现为闭环帧之间的连线pubLoopConstraintEdge = nh.advertise("/lio_sam/mapping/loop_closure_constraints", 1);// 发布局部map的降采样平面点集合pubRecentKeyFrames = nh.advertise("lio_sam/mapping/map_local", 1);// 发布历史帧(累加的)的角点、平面点降采样集合pubRecentKeyFrame = nh.advertise("lio_sam/mapping/cloud_registered", 1);// 发布当前帧原始点云配准之后的点云pubCloudRegisteredRaw = nh.advertise("lio_sam/mapping/cloud_registered_raw", 1);downSizeFilterCorner.setLeafSize(mappingCornerLeafSize, mappingCornerLeafSize, mappingCornerLeafSize);downSizeFilterSurf.setLeafSize(mappingSurfLeafSize, mappingSurfLeafSize, mappingSurfLeafSize);downSizeFilterICP.setLeafSize(mappingSurfLeafSize, mappingSurfLeafSize, mappingSurfLeafSize);downSizeFilterSurroundingKeyPoses.setLeafSize(surroundingKeyframeDensity, surroundingKeyframeDensity, surroundingKeyframeDensity); // for surrounding key poses of scan-to-map optimizationallocateMemory();}/*** 初始化*/void allocateMemory(){cloudKeyPoses3D.reset(new pcl::PointCloud());cloudKeyPoses6D.reset(new pcl::PointCloud());copy_cloudKeyPoses3D.reset(new pcl::PointCloud());copy_cloudKeyPoses6D.reset(new pcl::PointCloud());kdtreeSurroundingKeyPoses.reset(new pcl::KdTreeFLANN());kdtreeHistoryKeyPoses.reset(new pcl::KdTreeFLANN());laserCloudCornerLast.reset(new pcl::PointCloud()); // corner feature set from odoOptimizationlaserCloudSurfLast.reset(new pcl::PointCloud()); // surf feature set from odoOptimizationlaserCloudCornerLastDS.reset(new pcl::PointCloud()); // downsampled corner featuer set from odoOptimizationlaserCloudSurfLastDS.reset(new pcl::PointCloud()); // downsampled surf featuer set from odoOptimizationlaserCloudOri.reset(new pcl::PointCloud());coeffSel.reset(new pcl::PointCloud());laserCloudOriCornerVec.resize(N_SCAN * Horizon_SCAN);coeffSelCornerVec.resize(N_SCAN * Horizon_SCAN);laserCloudOriCornerFlag.resize(N_SCAN * Horizon_SCAN);laserCloudOriSurfVec.resize(N_SCAN * Horizon_SCAN);coeffSelSurfVec.resize(N_SCAN * Horizon_SCAN);laserCloudOriSurfFlag.resize(N_SCAN * Horizon_SCAN);std::fill(laserCloudOriCornerFlag.begin(), laserCloudOriCornerFlag.end(), false);std::fill(laserCloudOriSurfFlag.begin(), laserCloudOriSurfFlag.end(), false);laserCloudCornerFromMap.reset(new pcl::PointCloud());laserCloudSurfFromMap.reset(new pcl::PointCloud());laserCloudCornerFromMapDS.reset(new pcl::PointCloud());laserCloudSurfFromMapDS.reset(new pcl::PointCloud());kdtreeCornerFromMap.reset(new pcl::KdTreeFLANN());kdtreeSurfFromMap.reset(new pcl::KdTreeFLANN());for (int i = 0; i < 6; ++i){transformTobeMapped[i] = 0;}matP = cv::Mat(6, 6, CV_32F, cv::Scalar::all(0));}/*** 订阅当前激光帧点云信息,来自featureExtraction* 1、当前帧位姿初始化* 1) 如果是第一帧,用原始imu数据的RPY初始化当前帧位姿(旋转部分)* 2) 后续帧,用imu里程计计算两帧之间的增量位姿变换,作用于前一帧的激光位姿,得到当前帧激光位姿* 2、提取局部角点、平面点云集合,加入局部map* 1) 对最近的一帧关键帧,搜索时空维度上相邻的关键帧集合,降采样一下* 2) 对关键帧集合中的每一帧,提取对应的角点、平面点,加入局部map中* 3、当前激光帧角点、平面点集合降采样* 4、scan-to-map优化当前帧位姿* (1) 要求当前帧特征点数量足够多,且匹配的点数够多,才执行优化* (2) 迭代30次(上限)优化* 1) 当前激光帧角点寻找局部map匹配点* a.更新当前帧位姿,将当前帧角点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成直线(用距离中心点的协方差矩阵,特征值进行判断),则认为匹配上了* b.计算当前帧角点到直线的距离、垂线的单位向量,存储为角点参数* 2) 当前激光帧平面点寻找局部map匹配点* a.更新当前帧位姿,将当前帧平面点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成平面(最小二乘拟合平面),则认为匹配上了* b.计算当前帧平面点到平面的距离、垂线的单位向量,存储为平面点参数* 3) 提取当前帧中与局部map匹配上了的角点、平面点,加入同一集合* 4) 对匹配特征点计算Jacobian矩阵,观测值为特征点到直线、平面的距离,构建高斯牛顿方程,迭代优化当前位姿,存transformTobeMapped* (3)用imu原始RPY数据与scan-to-map优化后的位姿进行加权融合,更新当前帧位姿的roll、pitch,约束z坐标* 5、设置当前帧为关键帧并执行因子图优化* 1) 计算当前帧与前一帧位姿变换,如果变化太小,不设为关键帧,反之设为关键帧* 2) 添加激光里程计因子、GPS因子、闭环因子* 3) 执行因子图优化* 4) 得到当前帧优化后位姿,位姿协方差* 5) 添加cloudKeyPoses3D,cloudKeyPoses6D,更新transformTobeMapped,添加当前关键帧的角点、平面点集合* 6、更新因子图中所有变量节点的位姿,也就是所有历史关键帧的位姿,更新里程计轨迹* 7、发布激光里程计* 8、发布里程计、点云、轨迹*/void laserCloudInfoHandler(const lio_sam::cloud_infoConstPtr& msgIn){// 当前激光帧时间戳timeLaserInfoStamp = msgIn->header.stamp;timeLaserInfoCur = msgIn->header.stamp.toSec();// 提取当前激光帧角点、平面点集合cloudInfo = *msgIn;pcl::fromROSMsg(msgIn->cloud_corner, *laserCloudCornerLast);pcl::fromROSMsg(msgIn->cloud_surface, *laserCloudSurfLast);std::lock_guard lock(mtx);// mapping执行频率控制static double timeLastProcessing = -1;if (timeLaserInfoCur - timeLastProcessing >= mappingProcessInterval){timeLastProcessing = timeLaserInfoCur;// 当前帧位姿初始化// 1、如果是第一帧,用原始imu数据的RPY初始化当前帧位姿(旋转部分)// 2、后续帧,用imu里程计计算两帧之间的增量位姿变换,作用于前一帧的激光位姿,得到当前帧激光位姿updateInitialGuess();// 提取局部角点、平面点云集合,加入局部map// 1、对最近的一帧关键帧,搜索时空维度上相邻的关键帧集合,降采样一下// 2、对关键帧集合中的每一帧,提取对应的角点、平面点,加入局部map中extractSurroundingKeyFrames();// 当前激光帧角点、平面点集合降采样downsampleCurrentScan();// scan-to-map优化当前帧位姿// 1、要求当前帧特征点数量足够多,且匹配的点数够多,才执行优化// 2、迭代30次(上限)优化// 1) 当前激光帧角点寻找局部map匹配点// a.更新当前帧位姿,将当前帧角点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成直线(用距离中心点的协方差矩阵,特征值进行判断),则认为匹配上了// b.计算当前帧角点到直线的距离、垂线的单位向量,存储为角点参数// 2) 当前激光帧平面点寻找局部map匹配点// a.更新当前帧位姿,将当前帧平面点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成平面(最小二乘拟合平面),则认为匹配上了// b.计算当前帧平面点到平面的距离、垂线的单位向量,存储为平面点参数// 3) 提取当前帧中与局部map匹配上了的角点、平面点,加入同一集合// 4) 对匹配特征点计算Jacobian矩阵,观测值为特征点到直线、平面的距离,构建高斯牛顿方程,迭代优化当前位姿,存transformTobeMapped// 3、用imu原始RPY数据与scan-to-map优化后的位姿进行加权融合,更新当前帧位姿的roll、pitch,约束z坐标scan2MapOptimization();// 设置当前帧为关键帧并执行因子图优化// 1、计算当前帧与前一帧位姿变换,如果变化太小,不设为关键帧,反之设为关键帧// 2、添加激光里程计因子、GPS因子、闭环因子// 3、执行因子图优化// 4、得到当前帧优化后位姿,位姿协方差// 5、添加cloudKeyPoses3D,cloudKeyPoses6D,更新transformTobeMapped,添加当前关键帧的角点、平面点集合saveKeyFramesAndFactor();// 更新因子图中所有变量节点的位姿,也就是所有历史关键帧的位姿,更新里程计轨迹correctPoses();// 发布激光里程计publishOdometry();// 发布里程计、点云、轨迹// 1、发布历史关键帧位姿集合// 2、发布局部map的降采样平面点集合// 3、发布历史帧(累加的)的角点、平面点降采样集合// 4、发布里程计轨迹publishFrames();}}/*** 订阅GPS里程计,添加到GPS里程计队列*/void gpsHandler(const nav_msgs::Odometry::ConstPtr& gpsMsg){gpsQueue.push_back(*gpsMsg);}/*** 激光坐标系下的激光点,通过激光帧位姿,变换到世界坐标系下*/void pointAssociateToMap(PointType const * const pi, PointType * const po){po->x = transPointAssociateToMap(0,0) * pi->x + transPointAssociateToMap(0,1) * pi->y + transPointAssociateToMap(0,2) * pi->z + transPointAssociateToMap(0,3);po->y = transPointAssociateToMap(1,0) * pi->x + transPointAssociateToMap(1,1) * pi->y + transPointAssociateToMap(1,2) * pi->z + transPointAssociateToMap(1,3);po->z = transPointAssociateToMap(2,0) * pi->x + transPointAssociateToMap(2,1) * pi->y + transPointAssociateToMap(2,2) * pi->z + transPointAssociateToMap(2,3);po->intensity = pi->intensity;}/*** 对点云cloudIn进行变换transformIn,返回结果点云*/pcl::PointCloud::Ptr transformPointCloud(pcl::PointCloud::Ptr cloudIn, PointTypePose* transformIn){pcl::PointCloud::Ptr cloudOut(new pcl::PointCloud());int cloudSize = cloudIn->size();cloudOut->resize(cloudSize);Eigen::Affine3f transCur = pcl::getTransformation(transformIn->x, transformIn->y, transformIn->z, transformIn->roll, transformIn->pitch, transformIn->yaw);#pragma omp parallel for num_threads(numberOfCores)for (int i = 0; i < cloudSize; ++i){const auto &pointFrom = cloudIn->points[i];cloudOut->points[i].x = transCur(0,0) * pointFrom.x + transCur(0,1) * pointFrom.y + transCur(0,2) * pointFrom.z + transCur(0,3);cloudOut->points[i].y = transCur(1,0) * pointFrom.x + transCur(1,1) * pointFrom.y + transCur(1,2) * pointFrom.z + transCur(1,3);cloudOut->points[i].z = transCur(2,0) * pointFrom.x + transCur(2,1) * pointFrom.y + transCur(2,2) * pointFrom.z + transCur(2,3);cloudOut->points[i].intensity = pointFrom.intensity;}return cloudOut;}/*** 位姿格式变换*/gtsam::Pose3 pclPointTogtsamPose3(PointTypePose thisPoint){return gtsam::Pose3(gtsam::Rot3::RzRyRx(double(thisPoint.roll), double(thisPoint.pitch), double(thisPoint.yaw)),gtsam::Point3(double(thisPoint.x), double(thisPoint.y), double(thisPoint.z)));}/*** 位姿格式变换*/gtsam::Pose3 trans2gtsamPose(float transformIn[]){return gtsam::Pose3(gtsam::Rot3::RzRyRx(transformIn[0], transformIn[1], transformIn[2]), gtsam::Point3(transformIn[3], transformIn[4], transformIn[5]));}/*** Eigen格式的位姿变换*/Eigen::Affine3f pclPointToAffine3f(PointTypePose thisPoint){ return pcl::getTransformation(thisPoint.x, thisPoint.y, thisPoint.z, thisPoint.roll, thisPoint.pitch, thisPoint.yaw);}/*** Eigen格式的位姿变换*/Eigen::Affine3f trans2Affine3f(float transformIn[]){return pcl::getTransformation(transformIn[3], transformIn[4], transformIn[5], transformIn[0], transformIn[1], transformIn[2]);}/*** 位姿格式变换*/PointTypePose trans2PointTypePose(float transformIn[]){PointTypePose thisPose6D;thisPose6D.x = transformIn[3];thisPose6D.y = transformIn[4];thisPose6D.z = transformIn[5];thisPose6D.roll = transformIn[0];thisPose6D.pitch = transformIn[1];thisPose6D.yaw = transformIn[2];return thisPose6D;}/*** 保存全局关键帧特征点集合*/bool saveMapService(lio_sam::save_mapRequest& req, lio_sam::save_mapResponse& res){string saveMapDirectory;cout << "****************************************************" << endl;cout << "Saving map to pcd files ..." << endl;if(req.destination.empty()) saveMapDirectory = std::getenv("HOME") + savePCDDirectory;else saveMapDirectory = std::getenv("HOME") + req.destination;cout << "Save destination: " << saveMapDirectory << endl;// 这个代码太坑了!!注释掉// int unused = system((std::string("exec rm -r ") + saveMapDirectory).c_str());// unused = system((std::string("mkdir -p ") + saveMapDirectory).c_str());// 保存历史关键帧位姿pcl::io::savePCDFileBinary(saveMapDirectory + "/trajectory.pcd", *cloudKeyPoses3D);pcl::io::savePCDFileBinary(saveMapDirectory + "/transformations.pcd", *cloudKeyPoses6D);// 提取历史关键帧角点、平面点集合pcl::PointCloud::Ptr globalCornerCloud(new pcl::PointCloud());pcl::PointCloud::Ptr globalCornerCloudDS(new pcl::PointCloud());pcl::PointCloud::Ptr globalSurfCloud(new pcl::PointCloud());pcl::PointCloud::Ptr globalSurfCloudDS(new pcl::PointCloud());pcl::PointCloud::Ptr globalMapCloud(new pcl::PointCloud());for (int i = 0; i < (int)cloudKeyPoses3D->size(); i++) {*globalCornerCloud += *transformPointCloud(cornerCloudKeyFrames[i], &cloudKeyPoses6D->points[i]);*globalSurfCloud += *transformPointCloud(surfCloudKeyFrames[i], &cloudKeyPoses6D->points[i]);cout << "\r" << std::flush << "Processing feature cloud " << i << " of " << cloudKeyPoses6D->size() << " ...";}if(req.resolution != 0){cout << "\n\nSave resolution: " << req.resolution << endl;// 降采样downSizeFilterCorner.setInputCloud(globalCornerCloud);downSizeFilterCorner.setLeafSize(req.resolution, req.resolution, req.resolution);downSizeFilterCorner.filter(*globalCornerCloudDS);pcl::io::savePCDFileBinary(saveMapDirectory + "/CornerMap.pcd", *globalCornerCloudDS);// 降采样downSizeFilterSurf.setInputCloud(globalSurfCloud);downSizeFilterSurf.setLeafSize(req.resolution, req.resolution, req.resolution);downSizeFilterSurf.filter(*globalSurfCloudDS);pcl::io::savePCDFileBinary(saveMapDirectory + "/SurfMap.pcd", *globalSurfCloudDS);}else{pcl::io::savePCDFileBinary(saveMapDirectory + "/CornerMap.pcd", *globalCornerCloud);pcl::io::savePCDFileBinary(saveMapDirectory + "/SurfMap.pcd", *globalSurfCloud);}// 保存到一起,全局关键帧特征点集合*globalMapCloud += *globalCornerCloud;*globalMapCloud += *globalSurfCloud;int ret = pcl::io::savePCDFileBinary(saveMapDirectory + "/GlobalMap.pcd", *globalMapCloud);res.success = ret == 0;downSizeFilterCorner.setLeafSize(mappingCornerLeafSize, mappingCornerLeafSize, mappingCornerLeafSize);downSizeFilterSurf.setLeafSize(mappingSurfLeafSize, mappingSurfLeafSize, mappingSurfLeafSize);cout << "****************************************************" << endl;cout << "Saving map to pcd files completed\n" << endl;return true;}/*** 展示线程* 1、发布局部关键帧map的特征点云* 2、保存全局关键帧特征点集合*/void visualizeGlobalMapThread(){ros::Rate rate(0.2);while (ros::ok()){rate.sleep();// 发布局部关键帧map的特征点云publishGlobalMap();}if (savePCD == false)return;lio_sam::save_mapRequest req;lio_sam::save_mapResponse res;// 保存全局关键帧特征点集合if(!saveMapService(req, res)){cout << "Fail to save map" << endl;}}/*** 发布局部关键帧map的特征点云*/void publishGlobalMap(){if (pubLaserCloudSurround.getNumSubscribers() == 0)return;if (cloudKeyPoses3D->points.empty() == true)return;pcl::KdTreeFLANN::Ptr kdtreeGlobalMap(new pcl::KdTreeFLANN());;pcl::PointCloud::Ptr globalMapKeyPoses(new pcl::PointCloud());pcl::PointCloud::Ptr globalMapKeyPosesDS(new pcl::PointCloud());pcl::PointCloud::Ptr globalMapKeyFrames(new pcl::PointCloud());pcl::PointCloud::Ptr globalMapKeyFramesDS(new pcl::PointCloud());// kdtree查找最近一帧关键帧相邻的关键帧集合std::vector pointSearchIndGlobalMap;std::vector pointSearchSqDisGlobalMap;mtx.lock();kdtreeGlobalMap->setInputCloud(cloudKeyPoses3D);kdtreeGlobalMap->radiusSearch(cloudKeyPoses3D->back(), globalMapVisualizationSearchRadius, pointSearchIndGlobalMap, pointSearchSqDisGlobalMap, 0);mtx.unlock();for (int i = 0; i < (int)pointSearchIndGlobalMap.size(); ++i)globalMapKeyPoses->push_back(cloudKeyPoses3D->points[pointSearchIndGlobalMap[i]]);// 降采样pcl::VoxelGrid downSizeFilterGlobalMapKeyPoses;downSizeFilterGlobalMapKeyPoses.setLeafSize(globalMapVisualizationPoseDensity, globalMapVisualizationPoseDensity, globalMapVisualizationPoseDensity); // for global map visualizationdownSizeFilterGlobalMapKeyPoses.setInputCloud(globalMapKeyPoses);downSizeFilterGlobalMapKeyPoses.filter(*globalMapKeyPosesDS);// 提取局部相邻关键帧对应的特征点云for (int i = 0; i < (int)globalMapKeyPosesDS->size(); ++i){// 距离过大if (pointDistance(globalMapKeyPosesDS->points[i], cloudKeyPoses3D->back()) > globalMapVisualizationSearchRadius)continue;int thisKeyInd = (int)globalMapKeyPosesDS->points[i].intensity;*globalMapKeyFrames += *transformPointCloud(cornerCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);*globalMapKeyFrames += *transformPointCloud(surfCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);}// 降采样,发布pcl::VoxelGrid downSizeFilterGlobalMapKeyFrames; // for global map visualizationdownSizeFilterGlobalMapKeyFrames.setLeafSize(globalMapVisualizationLeafSize, globalMapVisualizationLeafSize, globalMapVisualizationLeafSize); // for global map visualizationdownSizeFilterGlobalMapKeyFrames.setInputCloud(globalMapKeyFrames);downSizeFilterGlobalMapKeyFrames.filter(*globalMapKeyFramesDS);publishCloud(&pubLaserCloudSurround, globalMapKeyFramesDS, timeLaserInfoStamp, odometryFrame);}/*** 闭环线程* 1、闭环scan-to-map,icp优化位姿* 1) 在历史关键帧中查找与当前关键帧距离最近的关键帧集合,选择时间相隔较远的一帧作为候选闭环帧* 2) 提取当前关键帧特征点集合,降采样;提取闭环匹配关键帧前后相邻若干帧的关键帧特征点集合,降采样* 3) 执行scan-to-map优化,调用icp方法,得到优化后位姿,构造闭环因子需要的数据,在因子图优化中一并加入更新位姿* 2、rviz展示闭环边*/void loopClosureThread(){if (loopClosureEnableFlag == false)return;ros::Rate rate(loopClosureFrequency);while (ros::ok()){rate.sleep();// 闭环scan-to-map,icp优化位姿// 1、在历史关键帧中查找与当前关键帧距离最近的关键帧集合,选择时间相隔较远的一帧作为候选闭环帧// 2、提取当前关键帧特征点集合,降采样;提取闭环匹配关键帧前后相邻若干帧的关键帧特征点集合,降采样// 3、执行scan-to-map优化,调用icp方法,得到优化后位姿,构造闭环因子需要的数据,在因子图优化中一并加入更新位姿// 注:闭环的时候没有立即更新当前帧的位姿,而是添加闭环因子,让图优化去更新位姿performLoopClosure();// rviz展示闭环边visualizeLoopClosure();}}/*** 订阅来自外部闭环检测程序提供的闭环数据,本程序没有提供,这里实际没用上*/void loopInfoHandler(const std_msgs::Float64MultiArray::ConstPtr& loopMsg){std::lock_guard lock(mtxLoopInfo);if (loopMsg->data.size() != 2)return;loopInfoVec.push_back(*loopMsg);while (loopInfoVec.size() > 5)loopInfoVec.pop_front();}/*** 闭环scan-to-map,icp优化位姿* 1、在历史关键帧中查找与当前关键帧距离最近的关键帧集合,选择时间相隔较远的一帧作为候选闭环帧* 2、提取当前关键帧特征点集合,降采样;提取闭环匹配关键帧前后相邻若干帧的关键帧特征点集合,降采样* 3、执行scan-to-map优化,调用icp方法,得到优化后位姿,构造闭环因子需要的数据,在因子图优化中一并加入更新位姿* 注:闭环的时候没有立即更新当前帧的位姿,而是添加闭环因子,让图优化去更新位姿*/void performLoopClosure(){if (cloudKeyPoses3D->points.empty() == true)return;mtx.lock();*copy_cloudKeyPoses3D = *cloudKeyPoses3D;*copy_cloudKeyPoses6D = *cloudKeyPoses6D;mtx.unlock();// 当前关键帧索引,候选闭环匹配帧索引int loopKeyCur;int loopKeyPre;// not-usedif (detectLoopClosureExternal(&loopKeyCur, &loopKeyPre) == false)// 在历史关键帧中查找与当前关键帧距离最近的关键帧集合,选择时间相隔较远的一帧作为候选闭环帧if (detectLoopClosureDistance(&loopKeyCur, &loopKeyPre) == false)return;// 提取pcl::PointCloud::Ptr cureKeyframeCloud(new pcl::PointCloud());pcl::PointCloud::Ptr prevKeyframeCloud(new pcl::PointCloud());{// 提取当前关键帧特征点集合,降采样loopFindNearKeyframes(cureKeyframeCloud, loopKeyCur, 0);// 提取闭环匹配关键帧前后相邻若干帧的关键帧特征点集合,降采样loopFindNearKeyframes(prevKeyframeCloud, loopKeyPre, historyKeyframeSearchNum);// 如果特征点较少,返回if (cureKeyframeCloud->size() < 300 || prevKeyframeCloud->size() < 1000)return;// 发布闭环匹配关键帧局部mapif (pubHistoryKeyFrames.getNumSubscribers() != 0)publishCloud(&pubHistoryKeyFrames, prevKeyframeCloud, timeLaserInfoStamp, odometryFrame);}// ICP参数设置static pcl::IterativeClosestPoint icp;icp.setMaxCorrespondenceDistance(historyKeyframeSearchRadius*2);icp.setMaximumIterations(100);icp.setTransformationEpsilon(1e-6);icp.setEuclideanFitnessEpsilon(1e-6);icp.setRANSACIterations(0);// scan-to-map,调用icp匹配icp.setInputSource(cureKeyframeCloud);icp.setInputTarget(prevKeyframeCloud);pcl::PointCloud::Ptr unused_result(new pcl::PointCloud());icp.align(*unused_result);// 未收敛,或者匹配不够好if (icp.hasConverged() == false || icp.getFitnessScore() > historyKeyframeFitnessScore)return;// 发布当前关键帧经过闭环优化后的位姿变换之后的特征点云if (pubIcpKeyFrames.getNumSubscribers() != 0){pcl::PointCloud::Ptr closed_cloud(new pcl::PointCloud());pcl::transformPointCloud(*cureKeyframeCloud, *closed_cloud, icp.getFinalTransformation());publishCloud(&pubIcpKeyFrames, closed_cloud, timeLaserInfoStamp, odometryFrame);}// 闭环优化得到的当前关键帧与闭环关键帧之间的位姿变换float x, y, z, roll, pitch, yaw;Eigen::Affine3f correctionLidarFrame;correctionLidarFrame = icp.getFinalTransformation();// 闭环优化前当前帧位姿Eigen::Affine3f tWrong = pclPointToAffine3f(copy_cloudKeyPoses6D->points[loopKeyCur]);// 闭环优化后当前帧位姿Eigen::Affine3f tCorrect = correctionLidarFrame * tWrong;pcl::getTranslationAndEulerAngles (tCorrect, x, y, z, roll, pitch, yaw);gtsam::Pose3 poseFrom = Pose3(Rot3::RzRyRx(roll, pitch, yaw), Point3(x, y, z));// 闭环匹配帧的位姿gtsam::Pose3 poseTo = pclPointTogtsamPose3(copy_cloudKeyPoses6D->points[loopKeyPre]);gtsam::Vector Vector6(6);float noiseScore = icp.getFitnessScore();Vector6 << noiseScore, noiseScore, noiseScore, noiseScore, noiseScore, noiseScore;noiseModel::Diagonal::shared_ptr constraintNoise = noiseModel::Diagonal::Variances(Vector6);// 添加闭环因子需要的数据mtx.lock();loopIndexQueue.push_back(make_pair(loopKeyCur, loopKeyPre));loopPoseQueue.push_back(poseFrom.between(poseTo));loopNoiseQueue.push_back(constraintNoise);mtx.unlock();loopIndexContainer[loopKeyCur] = loopKeyPre;}/*** 在历史关键帧中查找与当前关键帧距离最近的关键帧集合,选择时间相隔较远的一帧作为候选闭环帧*/bool detectLoopClosureDistance(int *latestID, int *closestID){// 当前关键帧帧int loopKeyCur = copy_cloudKeyPoses3D->size() - 1;int loopKeyPre = -1;// 当前帧已经添加过闭环对应关系,不再继续添加auto it = loopIndexContainer.find(loopKeyCur);if (it != loopIndexContainer.end())return false;// 在历史关键帧中查找与当前关键帧距离最近的关键帧集合std::vector pointSearchIndLoop;std::vector pointSearchSqDisLoop;kdtreeHistoryKeyPoses->setInputCloud(copy_cloudKeyPoses3D);kdtreeHistoryKeyPoses->radiusSearch(copy_cloudKeyPoses3D->back(), historyKeyframeSearchRadius, pointSearchIndLoop, pointSearchSqDisLoop, 0);// 在候选关键帧集合中,找到与当前帧时间相隔较远的帧,设为候选匹配帧for (int i = 0; i < (int)pointSearchIndLoop.size(); ++i){int id = pointSearchIndLoop[i];if (abs(copy_cloudKeyPoses6D->points[id].time - timeLaserInfoCur) > historyKeyframeSearchTimeDiff){loopKeyPre = id;break;}}if (loopKeyPre == -1 || loopKeyCur == loopKeyPre)return false;*latestID = loopKeyCur;*closestID = loopKeyPre;return true;}/*** not-used, 来自外部闭环检测程序提供的闭环匹配索引对*/bool detectLoopClosureExternal(int *latestID, int *closestID){// this function is not used yet, please ignore itint loopKeyCur = -1;int loopKeyPre = -1;std::lock_guard lock(mtxLoopInfo);if (loopInfoVec.empty())return false;double loopTimeCur = loopInfoVec.front().data[0];double loopTimePre = loopInfoVec.front().data[1];loopInfoVec.pop_front();if (abs(loopTimeCur - loopTimePre) < historyKeyframeSearchTimeDiff)return false;int cloudSize = copy_cloudKeyPoses6D->size();if (cloudSize < 2)return false;// latest keyloopKeyCur = cloudSize - 1;for (int i = cloudSize - 1; i >= 0; --i){if (copy_cloudKeyPoses6D->points[i].time >= loopTimeCur)loopKeyCur = round(copy_cloudKeyPoses6D->points[i].intensity);elsebreak;}// previous keyloopKeyPre = 0;for (int i = 0; i < cloudSize; ++i){if (copy_cloudKeyPoses6D->points[i].time <= loopTimePre)loopKeyPre = round(copy_cloudKeyPoses6D->points[i].intensity);elsebreak;}if (loopKeyCur == loopKeyPre)return false;auto it = loopIndexContainer.find(loopKeyCur);if (it != loopIndexContainer.end())return false;*latestID = loopKeyCur;*closestID = loopKeyPre;return true;}/*** 提取key索引的关键帧前后相邻若干帧的关键帧特征点集合,降采样*/void loopFindNearKeyframes(pcl::PointCloud::Ptr& nearKeyframes, const int& key, const int& searchNum){// 提取key索引的关键帧前后相邻若干帧的关键帧特征点集合nearKeyframes->clear();int cloudSize = copy_cloudKeyPoses6D->size();for (int i = -searchNum; i <= searchNum; ++i){int keyNear = key + i;if (keyNear < 0 || keyNear >= cloudSize )continue;*nearKeyframes += *transformPointCloud(cornerCloudKeyFrames[keyNear], ©_cloudKeyPoses6D->points[keyNear]);*nearKeyframes += *transformPointCloud(surfCloudKeyFrames[keyNear], ©_cloudKeyPoses6D->points[keyNear]);}if (nearKeyframes->empty())return;// 降采样pcl::PointCloud::Ptr cloud_temp(new pcl::PointCloud());downSizeFilterICP.setInputCloud(nearKeyframes);downSizeFilterICP.filter(*cloud_temp);*nearKeyframes = *cloud_temp;}/*** rviz展示闭环边*/void visualizeLoopClosure(){if (loopIndexContainer.empty())return;visualization_msgs::MarkerArray markerArray;// 闭环顶点visualization_msgs::Marker markerNode;markerNode.header.frame_id = odometryFrame;markerNode.header.stamp = timeLaserInfoStamp;markerNode.action = visualization_msgs::Marker::ADD;markerNode.type = visualization_msgs::Marker::SPHERE_LIST;markerNode.ns = "loop_nodes";markerNode.id = 0;markerNode.pose.orientation.w = 1;markerNode.scale.x = 0.3; markerNode.scale.y = 0.3; markerNode.scale.z = 0.3; markerNode.color.r = 0; markerNode.color.g = 0.8; markerNode.color.b = 1;markerNode.color.a = 1;// 闭环边visualization_msgs::Marker markerEdge;markerEdge.header.frame_id = odometryFrame;markerEdge.header.stamp = timeLaserInfoStamp;markerEdge.action = visualization_msgs::Marker::ADD;markerEdge.type = visualization_msgs::Marker::LINE_LIST;markerEdge.ns = "loop_edges";markerEdge.id = 1;markerEdge.pose.orientation.w = 1;markerEdge.scale.x = 0.1;markerEdge.color.r = 0.9; markerEdge.color.g = 0.9; markerEdge.color.b = 0;markerEdge.color.a = 1;// 遍历闭环for (auto it = loopIndexContainer.begin(); it != loopIndexContainer.end(); ++it){int key_cur = it->first;int key_pre = it->second;geometry_msgs::Point p;p.x = copy_cloudKeyPoses6D->points[key_cur].x;p.y = copy_cloudKeyPoses6D->points[key_cur].y;p.z = copy_cloudKeyPoses6D->points[key_cur].z;markerNode.points.push_back(p);markerEdge.points.push_back(p);p.x = copy_cloudKeyPoses6D->points[key_pre].x;p.y = copy_cloudKeyPoses6D->points[key_pre].y;p.z = copy_cloudKeyPoses6D->points[key_pre].z;markerNode.points.push_back(p);markerEdge.points.push_back(p);}markerArray.markers.push_back(markerNode);markerArray.markers.push_back(markerEdge);pubLoopConstraintEdge.publish(markerArray);}/*** 当前帧位姿初始化* 1、如果是第一帧,用原始imu数据的RPY初始化当前帧位姿(旋转部分)* 2、后续帧,用imu里程计计算两帧之间的增量位姿变换,作用于前一帧的激光位姿,得到当前帧激光位姿*/void updateInitialGuess(){// 前一帧的位姿,注:这里指lidar的位姿,后面都简写成位姿incrementalOdometryAffineFront = trans2Affine3f(transformTobeMapped);// 前一帧的初始化姿态角(来自原始imu数据),用于估计第一帧的位姿(旋转部分)static Eigen::Affine3f lastImuTransformation;// 如果关键帧集合为空,继续进行初始化if (cloudKeyPoses3D->points.empty()){// 当前帧位姿的旋转部分,用激光帧信息中的RPY(来自imu原始数据)初始化transformTobeMapped[0] = cloudInfo.imuRollInit;transformTobeMapped[1] = cloudInfo.imuPitchInit;transformTobeMapped[2] = cloudInfo.imuYawInit;if (!useImuHeadingInitialization)transformTobeMapped[2] = 0;lastImuTransformation = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit); return;}// 用当前帧和前一帧对应的imu里程计计算相对位姿变换,再用前一帧的位姿与相对变换,计算当前帧的位姿,存transformTobeMappedstatic bool lastImuPreTransAvailable = false;static Eigen::Affine3f lastImuPreTransformation;if (cloudInfo.odomAvailable == true){// 当前帧的初始估计位姿(来自imu里程计),后面用来计算增量位姿变换Eigen::Affine3f transBack = pcl::getTransformation(cloudInfo.initialGuessX, cloudInfo.initialGuessY, cloudInfo.initialGuessZ, cloudInfo.initialGuessRoll, cloudInfo.initialGuessPitch, cloudInfo.initialGuessYaw);if (lastImuPreTransAvailable == false){// 赋值给前一帧lastImuPreTransformation = transBack;lastImuPreTransAvailable = true;} else {// 当前帧相对于前一帧的位姿变换,imu里程计计算得到Eigen::Affine3f transIncre = lastImuPreTransformation.inverse() * transBack;// 前一帧的位姿Eigen::Affine3f transTobe = trans2Affine3f(transformTobeMapped);// 当前帧的位姿Eigen::Affine3f transFinal = transTobe * transIncre;// 更新当前帧位姿transformTobeMappedpcl::getTranslationAndEulerAngles(transFinal, transformTobeMapped[3], transformTobeMapped[4], transformTobeMapped[5], transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);// 赋值给前一帧lastImuPreTransformation = transBack;lastImuTransformation = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit); // save imu before return;return;}}// 只在第一帧调用(注意上面的return),用imu数据初始化当前帧位姿,仅初始化旋转部分if (cloudInfo.imuAvailable == true){// 当前帧的姿态角(来自原始imu数据)Eigen::Affine3f transBack = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit);// 当前帧相对于前一帧的姿态变换Eigen::Affine3f transIncre = lastImuTransformation.inverse() * transBack;// 前一帧的位姿Eigen::Affine3f transTobe = trans2Affine3f(transformTobeMapped);// 当前帧的位姿Eigen::Affine3f transFinal = transTobe * transIncre;// 更新当前帧位姿transformTobeMappedpcl::getTranslationAndEulerAngles(transFinal, transformTobeMapped[3], transformTobeMapped[4], transformTobeMapped[5], transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);lastImuTransformation = pcl::getTransformation(0, 0, 0, cloudInfo.imuRollInit, cloudInfo.imuPitchInit, cloudInfo.imuYawInit); // save imu before return;return;}}/*** not-used*/void extractForLoopClosure(){pcl::PointCloud::Ptr cloudToExtract(new pcl::PointCloud());// int numPoses = cloudKeyPoses3D->size();for (int i = numPoses-1; i >= 0; --i){if ((int)cloudToExtract->size() <= surroundingKeyframeSize)cloudToExtract->push_back(cloudKeyPoses3D->points[i]);elsebreak;}// 将相邻关键帧集合对应的角点、平面点,加入到局部map中,作为scan-to-map匹配的局部点云地图extractCloud(cloudToExtract);}/*** 提取局部角点、平面点云集合,加入局部map* 1、对最近的一帧关键帧,搜索时空维度上相邻的关键帧集合,降采样一下* 2、对关键帧集合中的每一帧,提取对应的角点、平面点,加入局部map中*/void extractNearby(){pcl::PointCloud::Ptr surroundingKeyPoses(new pcl::PointCloud());pcl::PointCloud::Ptr surroundingKeyPosesDS(new pcl::PointCloud());std::vector pointSearchInd;std::vector pointSearchSqDis;// kdtree的输入,全局关键帧位姿集合(历史所有关键帧集合)kdtreeSurroundingKeyPoses->setInputCloud(cloudKeyPoses3D); // 对最近的一帧关键帧,在半径区域内搜索空间区域上相邻的关键帧集合kdtreeSurroundingKeyPoses->radiusSearch(cloudKeyPoses3D->back(), (double)surroundingKeyframeSearchRadius, pointSearchInd, pointSearchSqDis);// 遍历搜索结果,pointSearchInd存的是结果在cloudKeyPoses3D下面的索引for (int i = 0; i < (int)pointSearchInd.size(); ++i){int id = pointSearchInd[i];// 加入相邻关键帧位姿集合中surroundingKeyPoses->push_back(cloudKeyPoses3D->points[id]);}// 降采样一下downSizeFilterSurroundingKeyPoses.setInputCloud(surroundingKeyPoses);downSizeFilterSurroundingKeyPoses.filter(*surroundingKeyPosesDS);// 加入时间上相邻的一些关键帧,比如当载体在原地转圈,这些帧加进来是合理的int numPoses = cloudKeyPoses3D->size();for (int i = numPoses-1; i >= 0; --i){if (timeLaserInfoCur - cloudKeyPoses6D->points[i].time < 10.0)surroundingKeyPosesDS->push_back(cloudKeyPoses3D->points[i]);elsebreak;}// 将相邻关键帧集合对应的角点、平面点,加入到局部map中,作为scan-to-map匹配的局部点云地图extractCloud(surroundingKeyPosesDS);}/*** 将相邻关键帧集合对应的角点、平面点,加入到局部map中,作为scan-to-map匹配的局部点云地图*/void extractCloud(pcl::PointCloud::Ptr cloudToExtract){// 相邻关键帧集合对应的角点、平面点,加入到局部map中;注:称之为局部map,后面进行scan-to-map匹配,所用到的map就是这里的相邻关键帧对应点云集合laserCloudCornerFromMap->clear();laserCloudSurfFromMap->clear(); // 遍历当前帧(实际是取最近的一个关键帧来找它相邻的关键帧集合)时空维度上相邻的关键帧集合for (int i = 0; i < (int)cloudToExtract->size(); ++i){// 距离超过阈值,丢弃if (pointDistance(cloudToExtract->points[i], cloudKeyPoses3D->back()) > surroundingKeyframeSearchRadius)continue;// 相邻关键帧索引int thisKeyInd = (int)cloudToExtract->points[i].intensity;if (laserCloudMapContainer.find(thisKeyInd) != laserCloudMapContainer.end()) {*laserCloudCornerFromMap += laserCloudMapContainer[thisKeyInd].first;*laserCloudSurfFromMap += laserCloudMapContainer[thisKeyInd].second;} else {// 相邻关键帧对应的角点、平面点云,通过6D位姿变换到世界坐标系下pcl::PointCloud laserCloudCornerTemp = *transformPointCloud(cornerCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);pcl::PointCloud laserCloudSurfTemp = *transformPointCloud(surfCloudKeyFrames[thisKeyInd], &cloudKeyPoses6D->points[thisKeyInd]);// 加入局部map*laserCloudCornerFromMap += laserCloudCornerTemp;*laserCloudSurfFromMap += laserCloudSurfTemp;laserCloudMapContainer[thisKeyInd] = make_pair(laserCloudCornerTemp, laserCloudSurfTemp);}}// 降采样局部角点mapdownSizeFilterCorner.setInputCloud(laserCloudCornerFromMap);downSizeFilterCorner.filter(*laserCloudCornerFromMapDS);laserCloudCornerFromMapDSNum = laserCloudCornerFromMapDS->size();// 降采样局部平面点mapdownSizeFilterSurf.setInputCloud(laserCloudSurfFromMap);downSizeFilterSurf.filter(*laserCloudSurfFromMapDS);laserCloudSurfFromMapDSNum = laserCloudSurfFromMapDS->size();// 太大了,清空一下内存if (laserCloudMapContainer.size() > 1000)laserCloudMapContainer.clear();}/*** 提取局部角点、平面点云集合,加入局部map* 1、对最近的一帧关键帧,搜索时空维度上相邻的关键帧集合,降采样一下* 2、对关键帧集合中的每一帧,提取对应的角点、平面点,加入局部map中*/void extractSurroundingKeyFrames(){if (cloudKeyPoses3D->points.empty() == true)return; // if (loopClosureEnableFlag == true)// {// extractForLoopClosure(); // } else {// extractNearby();// }// 提取局部角点、平面点云集合,加入局部map// 1、对最近的一帧关键帧,搜索时空维度上相邻的关键帧集合,降采样一下// 2、对关键帧集合中的每一帧,提取对应的角点、平面点,加入局部map中extractNearby();}/*** 当前激光帧角点、平面点集合降采样*/void downsampleCurrentScan(){// 当前激光帧角点集合降采样laserCloudCornerLastDS->clear();downSizeFilterCorner.setInputCloud(laserCloudCornerLast);downSizeFilterCorner.filter(*laserCloudCornerLastDS);laserCloudCornerLastDSNum = laserCloudCornerLastDS->size();// 当前激光帧平面点集合降采样laserCloudSurfLastDS->clear();downSizeFilterSurf.setInputCloud(laserCloudSurfLast);downSizeFilterSurf.filter(*laserCloudSurfLastDS);laserCloudSurfLastDSNum = laserCloudSurfLastDS->size();}/*** 更新当前帧位姿*/void updatePointAssociateToMap(){transPointAssociateToMap = trans2Affine3f(transformTobeMapped);}/*** 当前激光帧角点寻找局部map匹配点* 1、更新当前帧位姿,将当前帧角点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成直线(用距离中心点的协方差矩阵,特征值进行判断),则认为匹配上了* 2、计算当前帧角点到直线的距离、垂线的单位向量,存储为角点参数*/void cornerOptimization(){// 更新当前帧位姿updatePointAssociateToMap();// 遍历当前帧角点集合#pragma omp parallel for num_threads(numberOfCores)for (int i = 0; i < laserCloudCornerLastDSNum; i++){PointType pointOri, pointSel, coeff;std::vector pointSearchInd;std::vector pointSearchSqDis;// 角点(坐标还是lidar系)pointOri = laserCloudCornerLastDS->points[i];// 根据当前帧位姿,变换到世界坐标系(map系)下pointAssociateToMap(&pointOri, &pointSel);// 在局部角点map中查找当前角点相邻的5个角点kdtreeCornerFromMap->nearestKSearch(pointSel, 5, pointSearchInd, pointSearchSqDis);cv::Mat matA1(3, 3, CV_32F, cv::Scalar::all(0));cv::Mat matD1(1, 3, CV_32F, cv::Scalar::all(0));cv::Mat matV1(3, 3, CV_32F, cv::Scalar::all(0));// 要求距离都小于1mif (pointSearchSqDis[4] < 1.0) {// 计算5个点的均值坐标,记为中心点float cx = 0, cy = 0, cz = 0;for (int j = 0; j < 5; j++) {cx += laserCloudCornerFromMapDS->points[pointSearchInd[j]].x;cy += laserCloudCornerFromMapDS->points[pointSearchInd[j]].y;cz += laserCloudCornerFromMapDS->points[pointSearchInd[j]].z;}cx /= 5; cy /= 5; cz /= 5;// 计算协方差float a11 = 0, a12 = 0, a13 = 0, a22 = 0, a23 = 0, a33 = 0;for (int j = 0; j < 5; j++) {// 计算点与中心点之间的距离float ax = laserCloudCornerFromMapDS->points[pointSearchInd[j]].x - cx;float ay = laserCloudCornerFromMapDS->points[pointSearchInd[j]].y - cy;float az = laserCloudCornerFromMapDS->points[pointSearchInd[j]].z - cz;a11 += ax * ax; a12 += ax * ay; a13 += ax * az;a22 += ay * ay; a23 += ay * az;a33 += az * az;}a11 /= 5; a12 /= 5; a13 /= 5; a22 /= 5; a23 /= 5; a33 /= 5;// 构建协方差矩阵matA1.at(0, 0) = a11; matA1.at(0, 1) = a12; matA1.at(0, 2) = a13;matA1.at(1, 0) = a12; matA1.at(1, 1) = a22; matA1.at(1, 2) = a23;matA1.at(2, 0) = a13; matA1.at(2, 1) = a23; matA1.at(2, 2) = a33;// 特征值分解cv::eigen(matA1, matD1, matV1);// 如果最大的特征值相比次大特征值,大很多,认为构成了线,角点是合格的if (matD1.at(0, 0) > 3 * matD1.at(0, 1)) {// 当前帧角点坐标(map系下)float x0 = pointSel.x;float y0 = pointSel.y;float z0 = pointSel.z;// 局部map对应中心角点,沿着特征向量(直线方向)方向,前后各取一个点float x1 = cx + 0.1 * matV1.at(0, 0);float y1 = cy + 0.1 * matV1.at(0, 1);float z1 = cz + 0.1 * matV1.at(0, 2);float x2 = cx - 0.1 * matV1.at(0, 0);float y2 = cy - 0.1 * matV1.at(0, 1);float z2 = cz - 0.1 * matV1.at(0, 2);// area_012,也就是三个点组成的三角形面积*2,叉积的模|axb|=a*b*sin(theta)float a012 = sqrt(((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1)) * ((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1)) + ((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1)) * ((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1)) + ((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1)) * ((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1)));// line_12,底边边长float l12 = sqrt((x1 - x2)*(x1 - x2) + (y1 - y2)*(y1 - y2) + (z1 - z2)*(z1 - z2));// 两次叉积,得到点到直线的垂线段单位向量,x分量,下面同理float la = ((y1 - y2)*((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1)) + (z1 - z2)*((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1))) / a012 / l12;float lb = -((x1 - x2)*((x0 - x1)*(y0 - y2) - (x0 - x2)*(y0 - y1)) - (z1 - z2)*((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1))) / a012 / l12;float lc = -((x1 - x2)*((x0 - x1)*(z0 - z2) - (x0 - x2)*(z0 - z1)) + (y1 - y2)*((y0 - y1)*(z0 - z2) - (y0 - y2)*(z0 - z1))) / a012 / l12;// 三角形的高,也就是点到直线距离float ld2 = a012 / l12;// 距离越大,s越小,是个距离惩罚因子(权重)float s = 1 - 0.9 * fabs(ld2);// 点到直线的垂线段单位向量coeff.x = s * la;coeff.y = s * lb;coeff.z = s * lc;// 点到直线距离coeff.intensity = s * ld2;if (s > 0.1) {// 当前激光帧角点,加入匹配集合中laserCloudOriCornerVec[i] = pointOri;// 角点的参数coeffSelCornerVec[i] = coeff;laserCloudOriCornerFlag[i] = true;}}}}}/*** 当前激光帧平面点寻找局部map匹配点* 1、更新当前帧位姿,将当前帧平面点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成平面(最小二乘拟合平面),则认为匹配上了* 2、计算当前帧平面点到平面的距离、垂线的单位向量,存储为平面点参数*/void surfOptimization(){// 更新当前帧位姿updatePointAssociateToMap();// 遍历当前帧平面点集合#pragma omp parallel for num_threads(numberOfCores)for (int i = 0; i < laserCloudSurfLastDSNum; i++){PointType pointOri, pointSel, coeff;std::vector pointSearchInd;std::vector pointSearchSqDis;// 平面点(坐标还是lidar系)pointOri = laserCloudSurfLastDS->points[i];// 根据当前帧位姿,变换到世界坐标系(map系)下pointAssociateToMap(&pointOri, &pointSel); // 在局部平面点map中查找当前平面点相邻的5个平面点kdtreeSurfFromMap->nearestKSearch(pointSel, 5, pointSearchInd, pointSearchSqDis);Eigen::Matrix matA0;Eigen::Matrix matB0;Eigen::Vector3f matX0;matA0.setZero();matB0.fill(-1);matX0.setZero();// 要求距离都小于1mif (pointSearchSqDis[4] < 1.0) {for (int j = 0; j < 5; j++) {matA0(j, 0) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].x;matA0(j, 1) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].y;matA0(j, 2) = laserCloudSurfFromMapDS->points[pointSearchInd[j]].z;}// 假设平面方程为ax+by+cz+1=0,这里就是求方程的系数abc,d=1matX0 = matA0.colPivHouseholderQr().solve(matB0);// 平面方程的系数,也是法向量的分量float pa = matX0(0, 0);float pb = matX0(1, 0);float pc = matX0(2, 0);float pd = 1;// 单位法向量float ps = sqrt(pa * pa + pb * pb + pc * pc);pa /= ps; pb /= ps; pc /= ps; pd /= ps;// 检查平面是否合格,如果5个点中有点到平面的距离超过0.2m,那么认为这些点太分散了,不构成平面bool planeValid = true;for (int j = 0; j < 5; j++) {if (fabs(pa * laserCloudSurfFromMapDS->points[pointSearchInd[j]].x +pb * laserCloudSurfFromMapDS->points[pointSearchInd[j]].y +pc * laserCloudSurfFromMapDS->points[pointSearchInd[j]].z + pd) > 0.2) {planeValid = false;break;}}// 平面合格if (planeValid) {// 当前激光帧点到平面距离float pd2 = pa * pointSel.x + pb * pointSel.y + pc * pointSel.z + pd;float s = 1 - 0.9 * fabs(pd2) / sqrt(sqrt(pointSel.x * pointSel.x+ pointSel.y * pointSel.y + pointSel.z * pointSel.z));// 点到平面垂线单位法向量(其实等价于平面法向量)coeff.x = s * pa;coeff.y = s * pb;coeff.z = s * pc;// 点到平面距离coeff.intensity = s * pd2;if (s > 0.1) {// 当前激光帧平面点,加入匹配集合中laserCloudOriSurfVec[i] = pointOri;// 参数coeffSelSurfVec[i] = coeff;laserCloudOriSurfFlag[i] = true;}}}}}/*** 提取当前帧中与局部map匹配上了的角点、平面点,加入同一集合*/void combineOptimizationCoeffs(){// 遍历当前帧角点集合,提取出与局部map匹配上了的角点for (int i = 0; i < laserCloudCornerLastDSNum; ++i){if (laserCloudOriCornerFlag[i] == true){laserCloudOri->push_back(laserCloudOriCornerVec[i]);coeffSel->push_back(coeffSelCornerVec[i]);}}// 遍历当前帧平面点集合,提取出与局部map匹配上了的平面点for (int i = 0; i < laserCloudSurfLastDSNum; ++i){if (laserCloudOriSurfFlag[i] == true){laserCloudOri->push_back(laserCloudOriSurfVec[i]);coeffSel->push_back(coeffSelSurfVec[i]);}}// 清空标记std::fill(laserCloudOriCornerFlag.begin(), laserCloudOriCornerFlag.end(), false);std::fill(laserCloudOriSurfFlag.begin(), laserCloudOriSurfFlag.end(), false);}/*** scan-to-map优化* 对匹配特征点计算Jacobian矩阵,观测值为特征点到直线、平面的距离,构建高斯牛顿方程,迭代优化当前位姿,存transformTobeMapped* 公式推导:todo*/bool LMOptimization(int iterCount){// This optimization is from the original loam_velodyne by Ji Zhang, need to cope with coordinate transformation// lidar <- camera --- camera <- lidar// x = z --- x = y// y = x --- y = z// z = y --- z = x// roll = yaw --- roll = pitch// pitch = roll --- pitch = yaw// yaw = pitch --- yaw = roll// lidar -> camerafloat srx = sin(transformTobeMapped[1]);float crx = cos(transformTobeMapped[1]);float sry = sin(transformTobeMapped[2]);float cry = cos(transformTobeMapped[2]);float srz = sin(transformTobeMapped[0]);float crz = cos(transformTobeMapped[0]);// 当前帧匹配特征点数太少int laserCloudSelNum = laserCloudOri->size();if (laserCloudSelNum < 50) {return false;}cv::Mat matA(laserCloudSelNum, 6, CV_32F, cv::Scalar::all(0));cv::Mat matAt(6, laserCloudSelNum, CV_32F, cv::Scalar::all(0));cv::Mat matAtA(6, 6, CV_32F, cv::Scalar::all(0));cv::Mat matB(laserCloudSelNum, 1, CV_32F, cv::Scalar::all(0));cv::Mat matAtB(6, 1, CV_32F, cv::Scalar::all(0));cv::Mat matX(6, 1, CV_32F, cv::Scalar::all(0));PointType pointOri, coeff;// 遍历匹配特征点,构建Jacobian矩阵for (int i = 0; i < laserCloudSelNum; i++) {// lidar -> camera todopointOri.x = laserCloudOri->points[i].y;pointOri.y = laserCloudOri->points[i].z;pointOri.z = laserCloudOri->points[i].x;// lidar -> cameracoeff.x = coeffSel->points[i].y;coeff.y = coeffSel->points[i].z;coeff.z = coeffSel->points[i].x;coeff.intensity = coeffSel->points[i].intensity;// in camerafloat arx = (crx*sry*srz*pointOri.x + crx*crz*sry*pointOri.y - srx*sry*pointOri.z) * coeff.x+ (-srx*srz*pointOri.x - crz*srx*pointOri.y - crx*pointOri.z) * coeff.y+ (crx*cry*srz*pointOri.x + crx*cry*crz*pointOri.y - cry*srx*pointOri.z) * coeff.z;float ary = ((cry*srx*srz - crz*sry)*pointOri.x + (sry*srz + cry*crz*srx)*pointOri.y + crx*cry*pointOri.z) * coeff.x+ ((-cry*crz - srx*sry*srz)*pointOri.x + (cry*srz - crz*srx*sry)*pointOri.y - crx*sry*pointOri.z) * coeff.z;float arz = ((crz*srx*sry - cry*srz)*pointOri.x + (-cry*crz-srx*sry*srz)*pointOri.y)*coeff.x+ (crx*crz*pointOri.x - crx*srz*pointOri.y) * coeff.y+ ((sry*srz + cry*crz*srx)*pointOri.x + (crz*sry-cry*srx*srz)*pointOri.y)*coeff.z;// lidar -> cameramatA.at(i, 0) = arz;matA.at(i, 1) = arx;matA.at(i, 2) = ary;matA.at(i, 3) = coeff.z;matA.at(i, 4) = coeff.x;matA.at(i, 5) = coeff.y;// 点到直线距离、平面距离,作为观测值matB.at(i, 0) = -coeff.intensity;}cv::transpose(matA, matAt);matAtA = matAt * matA;matAtB = matAt * matB;// J^T·J·delta_x = -J^T·f 高斯牛顿cv::solve(matAtA, matAtB, matX, cv::DECOMP_QR);// 首次迭代,检查近似Hessian矩阵(J^T·J)是否退化,或者称为奇异,行列式值=0 todoif (iterCount == 0) {cv::Mat matE(1, 6, CV_32F, cv::Scalar::all(0));cv::Mat matV(6, 6, CV_32F, cv::Scalar::all(0));cv::Mat matV2(6, 6, CV_32F, cv::Scalar::all(0));cv::eigen(matAtA, matE, matV);matV.copyTo(matV2);isDegenerate = false;float eignThre[6] = {100, 100, 100, 100, 100, 100};for (int i = 5; i >= 0; i--) {if (matE.at(0, i) < eignThre[i]) {for (int j = 0; j < 6; j++) {matV2.at(i, j) = 0;}isDegenerate = true;} else {break;}}matP = matV.inv() * matV2;}if (isDegenerate){cv::Mat matX2(6, 1, CV_32F, cv::Scalar::all(0));matX.copyTo(matX2);matX = matP * matX2;}// 更新当前位姿 x = x + delta_xtransformTobeMapped[0] += matX.at(0, 0);transformTobeMapped[1] += matX.at(1, 0);transformTobeMapped[2] += matX.at(2, 0);transformTobeMapped[3] += matX.at(3, 0);transformTobeMapped[4] += matX.at(4, 0);transformTobeMapped[5] += matX.at(5, 0);float deltaR = sqrt(pow(pcl::rad2deg(matX.at(0, 0)), 2) +pow(pcl::rad2deg(matX.at(1, 0)), 2) +pow(pcl::rad2deg(matX.at(2, 0)), 2));float deltaT = sqrt(pow(matX.at(3, 0) * 100, 2) +pow(matX.at(4, 0) * 100, 2) +pow(matX.at(5, 0) * 100, 2));// delta_x很小,认为收敛if (deltaR < 0.05 && deltaT < 0.05) {return true; }return false; }/*** scan-to-map优化当前帧位姿* 1、要求当前帧特征点数量足够多,且匹配的点数够多,才执行优化* 2、迭代30次(上限)优化* 1) 当前激光帧角点寻找局部map匹配点* a.更新当前帧位姿,将当前帧角点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成直线(用距离中心点的协方差矩阵,特征值进行判断),则认为匹配上了* b.计算当前帧角点到直线的距离、垂线的单位向量,存储为角点参数* 2) 当前激光帧平面点寻找局部map匹配点* a.更新当前帧位姿,将当前帧平面点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成平面(最小二乘拟合平面),则认为匹配上了* b.计算当前帧平面点到平面的距离、垂线的单位向量,存储为平面点参数* 3) 提取当前帧中与局部map匹配上了的角点、平面点,加入同一集合* 4) 对匹配特征点计算Jacobian矩阵,观测值为特征点到直线、平面的距离,构建高斯牛顿方程,迭代优化当前位姿,存transformTobeMapped* 3、用imu原始RPY数据与scan-to-map优化后的位姿进行加权融合,更新当前帧位姿的roll、pitch,约束z坐标*/void scan2MapOptimization(){// 要求有关键帧if (cloudKeyPoses3D->points.empty())return;// 当前激光帧的角点、平面点数量足够多if (laserCloudCornerLastDSNum > edgeFeatureMinValidNum && laserCloudSurfLastDSNum > surfFeatureMinValidNum){// kdtree输入为局部map点云kdtreeCornerFromMap->setInputCloud(laserCloudCornerFromMapDS);kdtreeSurfFromMap->setInputCloud(laserCloudSurfFromMapDS);// 迭代30次for (int iterCount = 0; iterCount < 30; iterCount++){// 每次迭代清空特征点集合laserCloudOri->clear();coeffSel->clear();// 当前激光帧角点寻找局部map匹配点// 1、更新当前帧位姿,将当前帧角点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成直线(用距离中心点的协方差矩阵,特征值进行判断),则认为匹配上了// 2、计算当前帧角点到直线的距离、垂线的单位向量,存储为角点参数cornerOptimization();// 当前激光帧平面点寻找局部map匹配点// 1、更新当前帧位姿,将当前帧平面点坐标变换到map系下,在局部map中查找5个最近点,距离小于1m,且5个点构成平面(最小二乘拟合平面),则认为匹配上了// 2、计算当前帧平面点到平面的距离、垂线的单位向量,存储为平面点参数surfOptimization();// 提取当前帧中与局部map匹配上了的角点、平面点,加入同一集合combineOptimizationCoeffs();// scan-to-map优化// 对匹配特征点计算Jacobian矩阵,观测值为特征点到直线、平面的距离,构建高斯牛顿方程,迭代优化当前位姿,存transformTobeMappedif (LMOptimization(iterCount) == true)break; }// 用imu原始RPY数据与scan-to-map优化后的位姿进行加权融合,更新当前帧位姿的roll、pitch,约束z坐标transformUpdate();} else {ROS_WARN("Not enough features! Only %d edge and %d planar features available.", laserCloudCornerLastDSNum, laserCloudSurfLastDSNum);}}/*** 用imu原始RPY数据与scan-to-map优化后的位姿进行加权融合,更新当前帧位姿的roll、pitch,约束z坐标*/void transformUpdate(){if (cloudInfo.imuAvailable == true){// 俯仰角小于1.4if (std::abs(cloudInfo.imuPitchInit) < 1.4){double imuWeight = imuRPYWeight;tf::Quaternion imuQuaternion;tf::Quaternion transformQuaternion;double rollMid, pitchMid, yawMid;// roll角求加权均值,用scan-to-map优化得到的位姿与imu原始RPY数据,进行加权平均transformQuaternion.setRPY(transformTobeMapped[0], 0, 0);imuQuaternion.setRPY(cloudInfo.imuRollInit, 0, 0);tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);transformTobeMapped[0] = rollMid;// pitch角求加权均值,用scan-to-map优化得到的位姿与imu原始RPY数据,进行加权平均transformQuaternion.setRPY(0, transformTobeMapped[1], 0);imuQuaternion.setRPY(0, cloudInfo.imuPitchInit, 0);tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);transformTobeMapped[1] = pitchMid;}}// 更新当前帧位姿的roll, pitch, z坐标;因为是小车,roll、pitch是相对稳定的,不会有很大变动,一定程度上可以信赖imu的数据,z是进行高度约束transformTobeMapped[0] = constraintTransformation(transformTobeMapped[0], rotation_tollerance);transformTobeMapped[1] = constraintTransformation(transformTobeMapped[1], rotation_tollerance);transformTobeMapped[5] = constraintTransformation(transformTobeMapped[5], z_tollerance);// 当前帧位姿incrementalOdometryAffineBack = trans2Affine3f(transformTobeMapped);}/*** 值约束*/float constraintTransformation(float value, float limit){if (value < -limit)value = -limit;if (value > limit)value = limit;return value;}/*** 计算当前帧与前一帧位姿变换,如果变化太小,不设为关键帧,反之设为关键帧*/bool saveFrame(){if (cloudKeyPoses3D->points.empty())return true;// 前一帧位姿Eigen::Affine3f transStart = pclPointToAffine3f(cloudKeyPoses6D->back());// 当前帧位姿Eigen::Affine3f transFinal = pcl::getTransformation(transformTobeMapped[3], transformTobeMapped[4], transformTobeMapped[5], transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);// 位姿变换增量Eigen::Affine3f transBetween = transStart.inverse() * transFinal;float x, y, z, roll, pitch, yaw;pcl::getTranslationAndEulerAngles(transBetween, x, y, z, roll, pitch, yaw);// 旋转和平移量都较小,当前帧不设为关键帧if (abs(roll) < surroundingkeyframeAddingAngleThreshold &&abs(pitch) < surroundingkeyframeAddingAngleThreshold && abs(yaw) < surroundingkeyframeAddingAngleThreshold &&sqrt(x*x + y*y + z*z) < surroundingkeyframeAddingDistThreshold)return false;return true;}/*** 添加激光里程计因子*/void addOdomFactor(){if (cloudKeyPoses3D->points.empty()){// 第一帧初始化先验因子noiseModel::Diagonal::shared_ptr priorNoise = noiseModel::Diagonal::Variances((Vector(6) << 1e-2, 1e-2, M_PI*M_PI, 1e8, 1e8, 1e8).finished()); // rad*rad, meter*metergtSAMgraph.add(PriorFactor(0, trans2gtsamPose(transformTobeMapped), priorNoise));// 变量节点设置初始值initialEstimate.insert(0, trans2gtsamPose(transformTobeMapped));}else{// 添加激光里程计因子noiseModel::Diagonal::shared_ptr odometryNoise = noiseModel::Diagonal::Variances((Vector(6) << 1e-6, 1e-6, 1e-6, 1e-4, 1e-4, 1e-4).finished());gtsam::Pose3 poseFrom = pclPointTogtsamPose3(cloudKeyPoses6D->points.back());gtsam::Pose3 poseTo = trans2gtsamPose(transformTobeMapped);// 参数:前一帧id,当前帧id,前一帧与当前帧的位姿变换(作为观测值),噪声协方差gtSAMgraph.add(BetweenFactor(cloudKeyPoses3D->size()-1, cloudKeyPoses3D->size(), poseFrom.between(poseTo), odometryNoise));// 变量节点设置初始值initialEstimate.insert(cloudKeyPoses3D->size(), poseTo);}}/*** 添加GPS因子*/void addGPSFactor(){if (gpsQueue.empty())return;// 如果没有关键帧,或者首尾关键帧距离小于5m,不添加gps因子if (cloudKeyPoses3D->points.empty())return;else{if (pointDistance(cloudKeyPoses3D->front(), cloudKeyPoses3D->back()) < 5.0)return;}// 位姿协方差很小,没必要加入GPS数据进行校正if (poseCovariance(3,3) < poseCovThreshold && poseCovariance(4,4) < poseCovThreshold)return;static PointType lastGPSPoint;while (!gpsQueue.empty()){// 删除当前帧0.2s之前的里程计if (gpsQueue.front().header.stamp.toSec() < timeLaserInfoCur - 0.2){gpsQueue.pop_front();}// 超过当前帧0.2s之后,退出else if (gpsQueue.front().header.stamp.toSec() > timeLaserInfoCur + 0.2){break;}else{nav_msgs::Odometry thisGPS = gpsQueue.front();gpsQueue.pop_front();// GPS噪声协方差太大,不能用float noise_x = thisGPS.pose.covariance[0];float noise_y = thisGPS.pose.covariance[7];float noise_z = thisGPS.pose.covariance[14];if (noise_x > gpsCovThreshold || noise_y > gpsCovThreshold)continue;// GPS里程计位置float gps_x = thisGPS.pose.pose.position.x;float gps_y = thisGPS.pose.pose.position.y;float gps_z = thisGPS.pose.pose.position.z;if (!useGpsElevation){gps_z = transformTobeMapped[5];noise_z = 0.01;}// (0,0,0)无效数据if (abs(gps_x) < 1e-6 && abs(gps_y) < 1e-6)continue;// 每隔5m添加一个GPS里程计PointType curGPSPoint;curGPSPoint.x = gps_x;curGPSPoint.y = gps_y;curGPSPoint.z = gps_z;if (pointDistance(curGPSPoint, lastGPSPoint) < 5.0)continue;elselastGPSPoint = curGPSPoint;// 添加GPS因子gtsam::Vector Vector3(3);Vector3 << max(noise_x, 1.0f), max(noise_y, 1.0f), max(noise_z, 1.0f);noiseModel::Diagonal::shared_ptr gps_noise = noiseModel::Diagonal::Variances(Vector3);gtsam::GPSFactor gps_factor(cloudKeyPoses3D->size(), gtsam::Point3(gps_x, gps_y, gps_z), gps_noise);gtSAMgraph.add(gps_factor);aLoopIsClosed = true;break;}}}/*** 添加闭环因子*/void addLoopFactor(){if (loopIndexQueue.empty())return;// 闭环队列for (int i = 0; i < (int)loopIndexQueue.size(); ++i){// 闭环边对应两帧的索引int indexFrom = loopIndexQueue[i].first;int indexTo = loopIndexQueue[i].second;// 闭环边的位姿变换gtsam::Pose3 poseBetween = loopPoseQueue[i];gtsam::noiseModel::Diagonal::shared_ptr noiseBetween = loopNoiseQueue[i];gtSAMgraph.add(BetweenFactor(indexFrom, indexTo, poseBetween, noiseBetween));}loopIndexQueue.clear();loopPoseQueue.clear();loopNoiseQueue.clear();aLoopIsClosed = true;}/*** 设置当前帧为关键帧并执行因子图优化* 1、计算当前帧与前一帧位姿变换,如果变化太小,不设为关键帧,反之设为关键帧* 2、添加激光里程计因子、GPS因子、闭环因子* 3、执行因子图优化* 4、得到当前帧优化后位姿,位姿协方差* 5、添加cloudKeyPoses3D,cloudKeyPoses6D,更新transformTobeMapped,添加当前关键帧的角点、平面点集合*/void saveKeyFramesAndFactor(){// 计算当前帧与前一帧位姿变换,如果变化太小,不设为关键帧,反之设为关键帧if (saveFrame() == false)return;// 激光里程计因子addOdomFactor();// GPS因子addGPSFactor();// 闭环因子addLoopFactor();// cout << "****************************************************" << endl;// gtSAMgraph.print("GTSAM Graph:\n");// 执行优化isam->update(gtSAMgraph, initialEstimate);isam->update();if (aLoopIsClosed == true){isam->update();isam->update();isam->update();isam->update();isam->update();}// update之后要清空一下保存的因子图,注:历史数据不会清掉,ISAM保存起来了gtSAMgraph.resize(0);initialEstimate.clear();PointType thisPose3D;PointTypePose thisPose6D;Pose3 latestEstimate;// 优化结果isamCurrentEstimate = isam->calculateEstimate();// 当前帧位姿结果latestEstimate = isamCurrentEstimate.at(isamCurrentEstimate.size()-1);// cout << "****************************************************" << endl;// isamCurrentEstimate.print("Current estimate: ");// cloudKeyPoses3D加入当前帧位姿thisPose3D.x = latestEstimate.translation().x();thisPose3D.y = latestEstimate.translation().y();thisPose3D.z = latestEstimate.translation().z();// 索引thisPose3D.intensity = cloudKeyPoses3D->size(); cloudKeyPoses3D->push_back(thisPose3D);// cloudKeyPoses6D加入当前帧位姿thisPose6D.x = thisPose3D.x;thisPose6D.y = thisPose3D.y;thisPose6D.z = thisPose3D.z;thisPose6D.intensity = thisPose3D.intensity ; thisPose6D.roll = latestEstimate.rotation().roll();thisPose6D.pitch = latestEstimate.rotation().pitch();thisPose6D.yaw = latestEstimate.rotation().yaw();thisPose6D.time = timeLaserInfoCur;cloudKeyPoses6D->push_back(thisPose6D);// cout << "****************************************************" << endl;// cout << "Pose covariance:" << endl;// cout << isam->marginalCovariance(isamCurrentEstimate.size()-1) << endl << endl;// 位姿协方差poseCovariance = isam->marginalCovariance(isamCurrentEstimate.size()-1);// transformTobeMapped更新当前帧位姿transformTobeMapped[0] = latestEstimate.rotation().roll();transformTobeMapped[1] = latestEstimate.rotation().pitch();transformTobeMapped[2] = latestEstimate.rotation().yaw();transformTobeMapped[3] = latestEstimate.translation().x();transformTobeMapped[4] = latestEstimate.translation().y();transformTobeMapped[5] = latestEstimate.translation().z();// 当前帧激光角点、平面点,降采样集合pcl::PointCloud::Ptr thisCornerKeyFrame(new pcl::PointCloud());pcl::PointCloud::Ptr thisSurfKeyFrame(new pcl::PointCloud());pcl::copyPointCloud(*laserCloudCornerLastDS, *thisCornerKeyFrame);pcl::copyPointCloud(*laserCloudSurfLastDS, *thisSurfKeyFrame);// 保存特征点降采样集合cornerCloudKeyFrames.push_back(thisCornerKeyFrame);surfCloudKeyFrames.push_back(thisSurfKeyFrame);// 更新里程计轨迹updatePath(thisPose6D);}/*** 更新因子图中所有变量节点的位姿,也就是所有历史关键帧的位姿,更新里程计轨迹*/void correctPoses(){if (cloudKeyPoses3D->points.empty())return;if (aLoopIsClosed == true){// 清空局部maplaserCloudMapContainer.clear();// 清空里程计轨迹globalPath.poses.clear();// 更新因子图中所有变量节点的位姿,也就是所有历史关键帧的位姿int numPoses = isamCurrentEstimate.size();for (int i = 0; i < numPoses; ++i){cloudKeyPoses3D->points[i].x = isamCurrentEstimate.at(i).translation().x();cloudKeyPoses3D->points[i].y = isamCurrentEstimate.at(i).translation().y();cloudKeyPoses3D->points[i].z = isamCurrentEstimate.at(i).translation().z();cloudKeyPoses6D->points[i].x = cloudKeyPoses3D->points[i].x;cloudKeyPoses6D->points[i].y = cloudKeyPoses3D->points[i].y;cloudKeyPoses6D->points[i].z = cloudKeyPoses3D->points[i].z;cloudKeyPoses6D->points[i].roll = isamCurrentEstimate.at(i).rotation().roll();cloudKeyPoses6D->points[i].pitch = isamCurrentEstimate.at(i).rotation().pitch();cloudKeyPoses6D->points[i].yaw = isamCurrentEstimate.at(i).rotation().yaw();// 更新里程计轨迹updatePath(cloudKeyPoses6D->points[i]);}aLoopIsClosed = false;}}/*** 更新里程计轨迹*/void updatePath(const PointTypePose& pose_in){geometry_msgs::PoseStamped pose_stamped;pose_stamped.header.stamp = ros::Time().fromSec(pose_in.time);pose_stamped.header.frame_id = odometryFrame;pose_stamped.pose.position.x = pose_in.x;pose_stamped.pose.position.y = pose_in.y;pose_stamped.pose.position.z = pose_in.z;tf::Quaternion q = tf::createQuaternionFromRPY(pose_in.roll, pose_in.pitch, pose_in.yaw);pose_stamped.pose.orientation.x = q.x();pose_stamped.pose.orientation.y = q.y();pose_stamped.pose.orientation.z = q.z();pose_stamped.pose.orientation.w = q.w();globalPath.poses.push_back(pose_stamped);}/*** 发布激光里程计*/void publishOdometry(){// 发布激光里程计,odom等价mapnav_msgs::Odometry laserOdometryROS;laserOdometryROS.header.stamp = timeLaserInfoStamp;laserOdometryROS.header.frame_id = odometryFrame;laserOdometryROS.child_frame_id = "odom_mapping";laserOdometryROS.pose.pose.position.x = transformTobeMapped[3];laserOdometryROS.pose.pose.position.y = transformTobeMapped[4];laserOdometryROS.pose.pose.position.z = transformTobeMapped[5];laserOdometryROS.pose.pose.orientation = tf::createQuaternionMsgFromRollPitchYaw(transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]);pubLaserOdometryGlobal.publish(laserOdometryROS);// 发布TF,odom->lidarstatic tf::TransformBroadcaster br;tf::Transform t_odom_to_lidar = tf::Transform(tf::createQuaternionFromRPY(transformTobeMapped[0], transformTobeMapped[1], transformTobeMapped[2]),tf::Vector3(transformTobeMapped[3], transformTobeMapped[4], transformTobeMapped[5]));tf::StampedTransform trans_odom_to_lidar = tf::StampedTransform(t_odom_to_lidar, timeLaserInfoStamp, odometryFrame, "lidar_link");br.sendTransform(trans_odom_to_lidar);// Publish odometry for ROS (incremental)static bool lastIncreOdomPubFlag = false;static nav_msgs::Odometry laserOdomIncremental;static Eigen::Affine3f increOdomAffine; if (lastIncreOdomPubFlag == false){lastIncreOdomPubFlag = true;laserOdomIncremental = laserOdometryROS;increOdomAffine = trans2Affine3f(transformTobeMapped);} else {// 当前帧与前一帧之间的位姿变换Eigen::Affine3f affineIncre = incrementalOdometryAffineFront.inverse() * incrementalOdometryAffineBack;// 还是当前帧位姿(打印一下数据,可以看到与激光里程计的位姿transformTobeMapped是一样的)increOdomAffine = increOdomAffine * affineIncre;float x, y, z, roll, pitch, yaw;pcl::getTranslationAndEulerAngles (increOdomAffine, x, y, z, roll, pitch, yaw);if (cloudInfo.imuAvailable == true){if (std::abs(cloudInfo.imuPitchInit) < 1.4){double imuWeight = 0.1;tf::Quaternion imuQuaternion;tf::Quaternion transformQuaternion;double rollMid, pitchMid, yawMid;// roll姿态角加权平均transformQuaternion.setRPY(roll, 0, 0);imuQuaternion.setRPY(cloudInfo.imuRollInit, 0, 0);tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);roll = rollMid;// pitch姿态角加权平均transformQuaternion.setRPY(0, pitch, 0);imuQuaternion.setRPY(0, cloudInfo.imuPitchInit, 0);tf::Matrix3x3(transformQuaternion.slerp(imuQuaternion, imuWeight)).getRPY(rollMid, pitchMid, yawMid);pitch = pitchMid;}}laserOdomIncremental.header.stamp = timeLaserInfoStamp;laserOdomIncremental.header.frame_id = odometryFrame;laserOdomIncremental.child_frame_id = "odom_mapping";laserOdomIncremental.pose.pose.position.x = x;laserOdomIncremental.pose.pose.position.y = y;laserOdomIncremental.pose.pose.position.z = z;laserOdomIncremental.pose.pose.orientation = tf::createQuaternionMsgFromRollPitchYaw(roll, pitch, yaw);if (isDegenerate)laserOdomIncremental.pose.covariance[0] = 1;elselaserOdomIncremental.pose.covariance[0] = 0;}pubLaserOdometryIncremental.publish(laserOdomIncremental);}/*** 发布里程计、点云、轨迹* 1、发布历史关键帧位姿集合* 2、发布局部map的降采样平面点集合* 3、发布历史帧(累加的)的角点、平面点降采样集合* 4、发布里程计轨迹*/void publishFrames(){if (cloudKeyPoses3D->points.empty())return;// 发布历史关键帧位姿集合publishCloud(&pubKeyPoses, cloudKeyPoses3D, timeLaserInfoStamp, odometryFrame);// 发布局部map的降采样平面点集合publishCloud(&pubRecentKeyFrames, laserCloudSurfFromMapDS, timeLaserInfoStamp, odometryFrame);// 发布历史帧(累加的)的角点、平面点降采样集合if (pubRecentKeyFrame.getNumSubscribers() != 0){pcl::PointCloud::Ptr cloudOut(new pcl::PointCloud());PointTypePose thisPose6D = trans2PointTypePose(transformTobeMapped);*cloudOut += *transformPointCloud(laserCloudCornerLastDS, &thisPose6D);*cloudOut += *transformPointCloud(laserCloudSurfLastDS, &thisPose6D);publishCloud(&pubRecentKeyFrame, cloudOut, timeLaserInfoStamp, odometryFrame);}// 发布当前帧原始点云配准之后的点云if (pubCloudRegisteredRaw.getNumSubscribers() != 0){pcl::PointCloud::Ptr cloudOut(new pcl::PointCloud());pcl::fromROSMsg(cloudInfo.cloud_deskewed, *cloudOut);PointTypePose thisPose6D = trans2PointTypePose(transformTobeMapped);*cloudOut = *transformPointCloud(cloudOut, &thisPose6D);publishCloud(&pubCloudRegisteredRaw, cloudOut, timeLaserInfoStamp, odometryFrame);}// 发布里程计轨迹if (pubPath.getNumSubscribers() != 0){globalPath.header.stamp = timeLaserInfoStamp;globalPath.header.frame_id = odometryFrame;pubPath.publish(globalPath);}}

};int main(int argc, char** argv)

{ros::init(argc, argv, "lio_sam");mapOptimization MO;ROS_INFO("\033[1;32m----> Map Optimization Started.\033[0m");std::thread loopthread(&mapOptimization::loopClosureThread, &MO);std::thread visualizeMapThread(&mapOptimization::visualizeGlobalMapThread, &MO);ros::spin();loopthread.join();visualizeMapThread.join();return 0;

}

参考文献

LIOSAM代码架构 - 知乎

SLAM学习笔记(二十)LIO-SAM流程及代码详解(最全)_zkk9527的博客-CSDN博客_lio-sam